| This document is very much a work in progress. |

1. Intro

c-xrefactory is a software tool and a project aiming at restoring the sources of that old, but very useful, tool to a state where it can be enhanced and be the foundation for a highly capable refactoring browser.

It is currently in excellent working condition, so you can use it in your daily work. I do. For information about how to do that, see the README.md.

1.1. Caution

As indicated by the README.md, this is a long term restoration project. So anything you find in this document might be old, incorrect, guesses, temporary holders for thoughts or theories. Or actually true and useful.

Especially the names of variables, functions and modules are prone to change as understanding of them increases. They might also be refactored into something entirely different.

This document has progressed from non-existent, to a collection of unstructured thoughts, guesses, historic anecdotes and ideas plus a collection of unstructured, pre-existing wiki pages, and is now quite useful. Perhaps it will continue to be improved and "refactored" into something valuable for anyone who ventures into this project.

The last part of this document is an Archive where completely obsolete descriptions have been moved for future software archeologists to find.

1.2. Background

You will find some background about the project in the README.md.

This document tries to collect the knowledge and understanding about how c-xrefactory actually works, as well as plans for making it better, both in terms of working with the source, its structure and its features.

Hopefully over time this will be the design documentation of c-xrefactory, which, at that time, will be a fairly well structured and useful piece of software.

1.3. Goal

Ultimately c-xrefactory could become the refactoring browser for C, the one that everybody uses. As suggested by @tajmone in GitHub issue #39, by switching to a general protocol, we could possibly plug this into many editors.

However, to do that we need to refactor out the protocol parts. And to do that we need a better structure, and to dare to change that, we need to understand more of the intricacies of this beast, and we need tests. So the normal legacy code catch 22 applies…

Test coverage is starting to look good, coming up to slightly above 80% at the time of writing this. Many "tests" are just "application level" execution, rather than actual tests, but also this is improving.

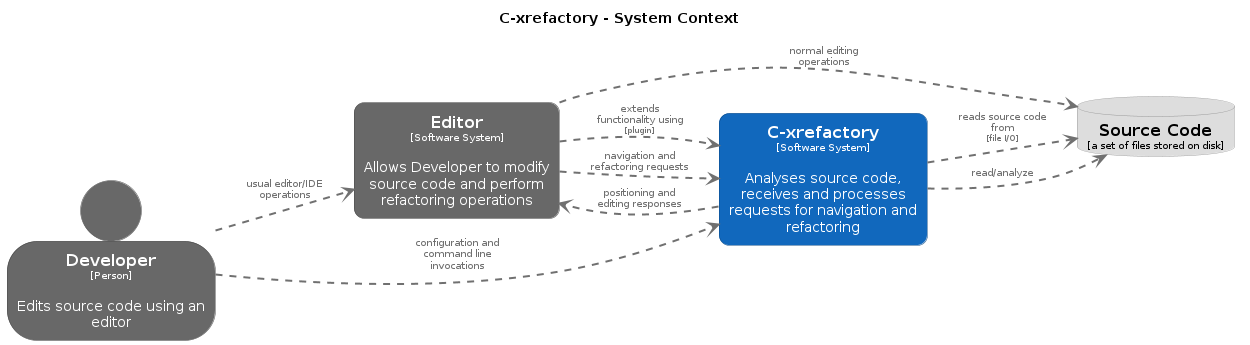

2. Context

c-xrefactory is designed to be an aid for programmers as they write, edit, inspect, read and improve the code they are working on.

The editor is used for the usual manual manipulation of the source code. c-xrefactory interacts with the editor to provide navigation and automated edits, i.e. refactorings.

3. Functional Overview

The c-xref program is, or rather was, a mish-mash of a multitude of features baked into one program. This is the major cause of the mess that it is source-wise.

It was

-

a generator for persistent cross-reference data

-

a reference server for editors, serving cross-reference, navigational and completion data over a protocol

-

a refactoring server (the world's first to cross the Refactoring Rubicon)

-

an HTML cross-reference generator (probably the root of the project) (REMOVED)

-

a C macro generator for structure fill (and other) functions (REMOVED)

It is the first three that are unique and constitute the great value of this project. The last two have been removed from the source. The macro generator was removed because it was a hack that prevented modern, tidy building, coding and refactoring. The HTML cross-reference generator has been superseded by modern alternatives like Doxygen and is not at the core of the goal of this project.

One might surmise that the HTML cross-reference generator was the initial purpose of what the original Xrefactory was based upon. Once that was in place the others followed, and were basically only bolted on top without much re-architecting of the C sources.

What we’d like to do is partition the project into separate parts, each having a clear usage.

The following sections are aimed at describing various features of c-xrefactory.

3.1. Main functionality

A programmer constantly needs to navigate, understand and improve the source code in order to lessen the cognitive load for understanding and making changes.

c-xrefactory provides two sets of functions for this directly from within the editor:

-

navigation, searching and browsing symbols

-

automated refactorings, i.e. non-behaviour changing edits

C and Yacc source code is supported.

3.1.1. Navigation

A user can navigate all references of a symbol, limited to the semantic scope of that symbol, by "Goto Definition" and then navigate using "Next/previous Reference". This is a fast way to inspect where a symbol is used.

| This also applies to non-terminals and semantic attributes in Yacc grammars! |

3.1.2. Browsing

It is also possible to search for definitions or symbols containing a string, including possible wildcards. This will present various forms of "menus" where filters can be applied and a found symbol can be inspected.

3.1.3. Completion

As c-xrefactory has information about symbols and their semantic scope, it can also provide semantically informed completions and suggestions.

3.1.4. Automated refactorings

In his book "Refactoring" Martin Fowler describes a large number of refactorings: changes to source code that do not change its behaviour but improve its structure and readability. For each refactoring he describes, step by step, which edits to make to apply the refactoring manually.

The natural next step was of course to attempt to automate this in editors or IDEs, which started to happen.

In an article from 2001 Martin Fowler pronounced Xref, the ancestor of c-xrefactory, to be the first tool to cross "Refactoring's Rubicon", being able to extract a function in a semantically correct way.

The term "automated" means that some software can examine the source code and quickly and safely modify it using patterns from the list of possible refactorings, without user interaction. Many refactorings in the book, and on the website, are applicable mostly to OO languages, but many also apply to C. c-xrefactory can perform some of them. More are considered for implementation.

-

"Rename Symbol" - change the name of a variable, type or function, but only within the semantic scope of the symbol

-

"Extract Macro/Function" - a region of the code can be extracted to a new function or macro

-

"Organize Includes" - clean up a list of #include directives by partitioning and sorting them

-

"Rename Included File" - rename the file in the #include directive and update all other #include directives of that file

-

"Move Function To Other File" - move a function to another file, automatically add an extern declaration in an appropriate header file and ensure that the header is included in the file where the function originally was
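The made-up C file below shows what "only within the semantic scope" means for Rename Symbol. It contains two unrelated variables that happen to share the name `count`. A scope-aware rename of the file-scope `count` has to update `increment()` and `currentCount()`, but must leave the local `count` in `sumTo()` alone. A plain textual search-and-replace would get this wrong.

```c
#include <assert.h>

/* File-scope `count`: renaming this symbol must update increment() and currentCount(). */
static int count = 0;

void increment(void) { count++; }

int currentCount(void) { return count; }

/* A different, block-scope `count` that shadows the file-scope one.
   A rename of the file-scope symbol must not touch this declaration or its uses. */
int sumTo(int n) {
    assert(n >= 0);
    int count = 0;
    for (int i = 1; i <= n; i++)
        count += i;
    return count;
}
```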

Using these automated refactorings it is much easier and safer to continuously maintain and improve the quality of any code base.

3.2. Options, option files and configuration

The current version of C-xrefactory allows only two possible sets of configuration/options.

The primary storage is (currently) the file $HOME/.c-xrefrc, which stores the "standard" options for all projects. Each project has a separate section which is started with a section marker, the project name surrounded by square brackets, [project1].

When you start c-xref you can use the command line option -xrefrc to request that a particular option file should be used instead of the "standard options".

| When running the edit server there seems to be no way to indicate different option files for different projects/files. Although you can start the server with -xrefrc, you will be stuck with that file for the whole session and for all projects. |

3.3. LSP

The LSP protocol is a common protocol for language servers such as clangd and c-xrefactory. It allows an editor (client) to interface to a server to request information, such as reference positions, and operations, such as refactorings, without knowing exactly which server it talks to.

Recent versions of c-xrefactory have an initial implementation of a very small portion of the LSP protocol. The plan is to fully integrate the functionality of c-xrefactory into the LSP protocol. This will allow use of c-xrefactory not only from Emacs but also from Visual Studio Code or any other editor that supports the LSP protocol.

3.3.1. LSP Protocol Limitations

The LSP protocol was designed for single-shot, non-interactive operations. This creates constraints for c-xrefactory’s advanced refactorings:

Interactive Refactorings: C-xrefactory’s extract/parameter operations require multi-step user input (names, positions, declarations). LSP’s textDocument/codeAction doesn’t support interactive dialogs.

Symbol Browsing: C-xrefactory provides interactive symbol browsers with filtering and keyboard navigation. LSP returns flat reference lists with no standard for interactive UI.

Strategy: The LSP implementation aims to:

-

Provide basic IDE features (definition, completion, simple refactorings) to modern editors

-

Expose c-xrefactory’s advanced refactoring capabilities where possible

-

Keep the Emacs client as the primary interface for full interactive features

LSP serves to make c-xrefactory more accessible while the Emacs client probably will remain the gateway to its complete refactoring power.

4. Quality Attributes

The most important quality attributes are

-

correctness - a refactoring should never alter the behaviour of the refactored code

-

completeness - no reference to a symbol should ever be missed

-

performance - a refactoring should be sufficiently quick so the user keeps focus on the task at hand

5. Constraints

TBD.

6. Principles

6.1. Reference Database and Parsing

The reference database is used only to hold externally visible identifiers to ensure that references to an identifier can be found across all files in the used source.

All symbols that are only visible inside a unit are handled by reparsing the file of interest.
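The made-up file below illustrates the split. Only the symbols with external linkage would be candidates for the reference database. The `static` function and the local variable are visible only inside this file, so their references are found by reparsing it.

```c
#include <assert.h>

/* External linkage: visible from other files, so it belongs in the reference database. */
int totalCalls = 0;

/* Internal linkage (static): only visible in this file, so its references are
   found by reparsing this file rather than by looking them up in the database. */
static int twice(int x) { return 2 * x; }

/* External linkage: belongs in the reference database. */
int publicApi(int x) {
    int doubled = twice(x); /* local variable: only found by reparsing */
    totalCalls++;
    return doubled + 1;
}
```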

6.2. TBD.

7. Software Architecture

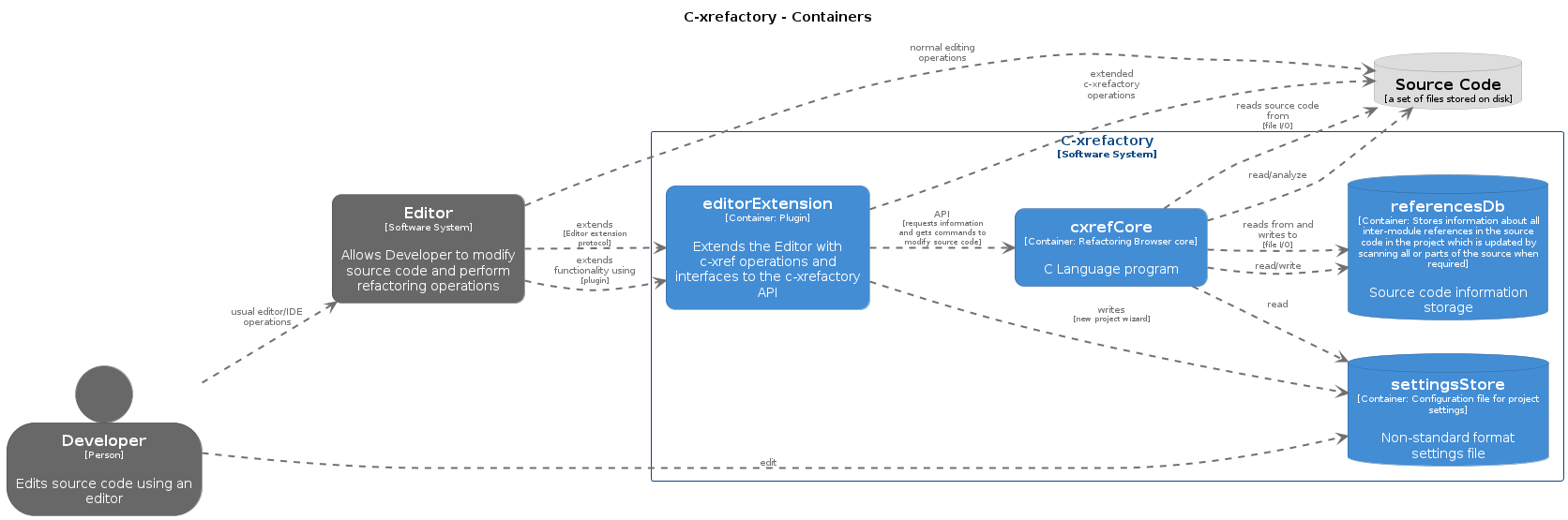

7.1. Container View

7.2. Containers

At this point the description of the internal structure of the containers is tentative. The actual interfaces are not particularly clean; most code files can, and do, include pretty much every other module.

| One focus area for the ongoing work is to try to pry out modules/components from the code mess by moving functions around, renaming and hiding functions, where possible. |

7.2.1. CxrefCore

cxrefCore is the core container. It does all the work when it comes to finding and reporting references to symbols, communicating refactoring requests as well as storing reference information for longer term storage and caching.

Although c-xref can be used as a command line tool, which can be handy when debugging or exploring, it is normally used in "server" mode. In server mode the communication between the editor extension and the cxrefCore container is a back-and-forth communication using a non-standard protocol over standard pipes.

The responsibilities of cxrefCore can largely be divided into

-

parsing source files to create and maintain the references database, which stores all inter-module references

-

parsing source files to get important information such as the positions of a function's beginning and end

-

managing editor buffer state (as it might differ from the file on disc)

-

performing symbol navigation

-

creating and serving completion suggestions

-

performing refactorings such as renames, extracts and parameter manipulation

At this point it seems like refactorings are performed as separate invocations of c-xref rather than through the server interface.

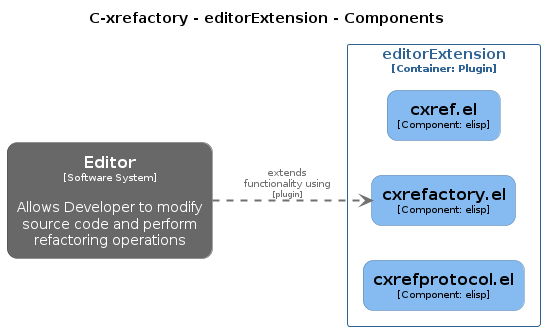

7.2.2. EditorExtension

The EditorExtension container is responsible for plugging into an editor of choice and handling the user interface, buffer management and execution of the refactoring edit operations.

Currently there is only one such extension supported, for Emacs, although there existed code, still available in the repo history, for an extension for jEdit which hasn’t been updated, modified or checked for a long time and is no longer a part of this project.

7.2.3. ReferencesDB

The References database stores cross-referencing information for symbols visible outside the module they are defined in. Information about local/static symbols is not stored but gathered by parsing that particular source file on demand.

Currently this information is stored in a somewhat cryptic, optimized text format.

This storage can be divided into multiple files, probably for faster access. Symbols are then hashed to determine which of the "database" files each one is stored in. As all cross-referencing information for a symbol is stored in the same "record", this allows reading only a single file when a symbol is looked up.

8. Code

8.1. Commands

The editorExtension calls the server using command line options. These are first converted to a command enum whose values start with OLO ("on-line operation") or AVR ("available refactoring").

Sometimes the server needs to call the cross-referencer. This is done in the same manner, with command line options, but since the call is internal the wanted arguments are stored in a vector which is passed to xref() in the same manner as main() passes the actual argc/argv.

Many of the commands require extra information like positions/markers, which is passed in as additional arguments. E.g. a rename requires the name to rename to, which is sent in the renameto= option, which is argparsed and stored in the option structure.
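The sketch below shows both patterns together: an entry point with the same shape as `main()` picks an extra argument out of argv, and an internal caller builds its own argument vector. The names `Options`, `parseArguments()` and `renameTargetFor()` are hypothetical. The spelling `-renameto=` is assumed from the description above. This is not the actual c-xrefactory code.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical subset of the option structure. */
typedef struct {
    const char *renameTo; /* value of -renameto=, or NULL if absent */
} Options;

/* Same shape as main(): takes argc/argv and fills in the options. */
void parseArguments(int argc, char *argv[], Options *options) {
    static const char prefix[] = "-renameto=";
    const size_t prefixLength = sizeof(prefix) - 1;

    options->renameTo = NULL;
    for (int i = 1; i < argc; i++) { /* argv[0] is the program name */
        if (strncmp(argv[i], prefix, prefixLength) == 0)
            options->renameTo = argv[i] + prefixLength; /* points into argv[i] */
    }
}

/* An internal caller builds its own vector, just as main() would receive one. */
const char *renameTargetFor(char *renameArgument) {
    char *args[] = {"c-xref", renameArgument, "file.c", NULL};
    Options options;
    parseArguments(3, args, &options);
    return options.renameTo; /* points into the caller's renameArgument */
}
```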

Some of these extra arguments are fairly random, like -olcxparnum= and -olcxparnum2=. This should be cleaned up.

A move towards "events" with arguments would be helpful. This would mean that we need to:

-

List all "events" that c-xref needs to handle

-

Define the parameters/extra info that each of them needs

-

Clean up the command line options to follow this

-

Create event structures to match each event and pass these to server, xref and refactory

-

Rebuild the main command loop to parse command line options into event structures
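One possible shape for such events is a tagged union in which each event carries only the parameters it needs. The event kinds and fields below are hypothetical examples, not an agreed design. The real list would come from the OLO/AVR commands.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical event kinds. */
typedef enum { EVENT_GOTO_DEFINITION, EVENT_RENAME } EventKind;

typedef struct {
    const char *fileName;
    int line;
    int column;
} SourcePosition;

/* Each event carries exactly the parameters it needs, instead of loose option fields. */
typedef struct {
    EventKind kind;
    SourcePosition position;
    union {
        struct { const char *newName; } rename; /* only valid for EVENT_RENAME */
    } u;
} Event;

Event makeRenameEvent(const char *fileName, int line, int column, const char *newName) {
    Event event = {.kind = EVENT_RENAME, .position = {fileName, line, column}};
    event.u.rename.newName = newName;
    return event;
}

/* The main loop would dispatch on the kind; this stub only names the operation. */
const char *describeEvent(const Event *event) {
    switch (event->kind) {
    case EVENT_GOTO_DEFINITION: return "goto-definition";
    case EVENT_RENAME:          return "rename";
    }
    return "unknown";
}
```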

8.2. Passes

c-xrefactory makes it possible to parse the analyzed sources in multiple passes, in case you compile the project sources with different C defines. In the project configuration file you specify `-passN` followed by the settings, typically C preprocessor defines, that are to be applied for this pass over the sources.
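The made-up file below shows why several passes can be needed. If it is parsed only without `WITH_LOGGING` defined, `logMessage()` and `logCount` are never seen, so a rename of `logMessage()` would silently miss this definition. An additional pass with `-DWITH_LOGGING` makes both branches visible.

```c
#include <assert.h>

#ifdef WITH_LOGGING
static int logCount = 0;
/* Only seen by a pass that defines WITH_LOGGING. */
static void logMessage(const char *message) { (void)message; logCount++; }
#define LOG(message) logMessage(message)
#else
/* Only seen by a pass that does NOT define WITH_LOGGING. */
#define LOG(message) ((void)0)
#endif

int process(int x) {
    LOG("processing");
    return x * 2;
}
```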

8.3. File Processing Orchestration

The file processing architecture differs significantly between Server mode, Xref mode, and LSP mode, with confusing global state and naming inconsistencies that make the code hard to follow.

8.3.1. File Scheduling - How Files Get Marked for Processing

All modes begin by marking files for processing using the isScheduled flag in the file table.

Initial Scheduling (All Modes)

Called from:

-

Server: initServer() → processFileArguments() [server.c:151]

-

Xref: mainTaskEntryInitialisations() → processFileArguments() [startup.c:706]

Flow:

processFileArguments() [options.c:1893]
│
└─> FOR each file in options.inputFiles
    │
    └─> processFileArgument(filename) [options.c:1864]
        │
        └─> dirInputFile(...) [options.c:465]
            ├─ If directory: recursively map over files
            ├─ If file: scheduleCommandLineEnteredFileToProcess(filename)
            │   └─ SETS: fileItem->isScheduled = true [line 450]
            └─ If wildcard: expand and recurse

Result: All command-line files (and directory contents if -r flag) are marked with isScheduled = true.

Additional Update Scheduling (Xref Mode Only)

Called from: callXref() → scheduleModifiedFilesToUpdate() [xref.c:296]

Flow:

scheduleModifiedFilesToUpdate(isRefactoring) [xref.c:207]
│
├─> mapOverFileTable(schedulingToUpdate, isRefactoring)
│   └─ For each file: if modified, SETS: fileItem->scheduledToUpdate = true
│
├─> If UPDATE_FULL: makeIncludeClosureOfFilesToUpdate()
│   └─ Expands scheduledToUpdate to include all files that #include updated files
│
└─> mapOverFileTable(schedulingUpdateToProcess)
    └─ For each file: if (scheduledToUpdate && isArgument)
       SETS: fileItem->isScheduled = true [line 115]

Result: In update mode, only modified files (and their includers) get isScheduled = true.
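The include-closure step can be modelled as a small fixed-point computation. It keeps marking files that include an already marked file until nothing changes. After that, only marked files that were given as arguments are scheduled. The flag names mirror the flow above. The `File` struct, the include matrix and the function names are simplified assumptions, not the real file table.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_FILES 8

typedef struct {
    bool isArgument;        /* given on the command line (or found via -r) */
    bool scheduledToUpdate; /* modified, or (transitively) includes a modified file */
    bool isScheduled;       /* will actually be parsed */
} File;

/* includes[a][b] is true when file a has an #include of file b. */
void includeClosure(File files[], int count, bool includes[][MAX_FILES]) {
    assert(count <= MAX_FILES);
    bool changed = true;
    while (changed) { /* repeat until a fixed point is reached */
        changed = false;
        for (int a = 0; a < count; a++) {
            if (files[a].scheduledToUpdate)
                continue;
            for (int b = 0; b < count; b++) {
                if (includes[a][b] && files[b].scheduledToUpdate) {
                    files[a].scheduledToUpdate = true;
                    changed = true;
                    break;
                }
            }
        }
    }
}

/* Corresponds to the last step: isScheduled = scheduledToUpdate && isArgument. */
void scheduleUpdatesToProcess(File files[], int count) {
    for (int i = 0; i < count; i++)
        if (files[i].scheduledToUpdate && files[i].isArgument)
            files[i].isScheduled = true;
}
```

For example, if main.c includes config.h, config.h includes util.h, and only util.h was modified, main.c is scheduled even though it did not change itself.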

8.3.2. Server Mode Flow

SINGLE FILE PER REQUEST - Server processes one file per request, but all files are initially scheduled by processFileArguments() in initServer().

initServer(args) [server.c:148]
├─> processOptions(args, ...)
├─> processFileArguments() ← SCHEDULES ALL FILES
└─ Sets up completions

server() [server.c:237] - Infinite request loop
│
└─> FOR EACH REQUEST:
    │
    └─> callServer(baseArgs, requestArgs, &firstPass) [server.c:214]
        │
        ├─> prepareInputFileForRequest() [server.c:95]
        │   ├─ Gets first scheduled file (local check only)
        │   ├─ SETS: requestFileNumber = NO_FILE_NUMBER [line 104] (if no file)
        │   └─ SETS: requestFileNumber = fileNumber [line 121] (if file found)
        │
        └─> processFile(baseArgs, requestArgs, &firstPass) [server.c:199]
            ├─ SETS: inputFileName = fileItem->name [line 205]
            │
            └─> singlePass(args, nargs, &firstPass) [server.c:155]
                │
                ├─> initializeFileProcessing(args, nargs, &firstPass) [startup.c:490]
                │   ├─ READS: fileName = inputFileName [line 502]
                │   ├─ USES: parsingConfig.fileNumber = currentFile.characterBuffer.fileNumber [line 161]
                │   │
                │   └─> computeAndOpenInputFile(inputFileName) [startup.c:112]
                │       ├─ Gets EditorBuffer or opens file
                │       │
                │       └─> initInput(inputFile, inputBuffer, "\n", fileName) [yylex.c]
                │           └─ Sets up currentFile global with CharacterBuffer
                │
                ├─> parseInputFile() [server.c:131]
                │   ├─ USES: currentFile.fileName [line 133]
                │   ├─ Calls setupParsingConfig(requestFileNumber) [line 136]
                │   │
                │   └─> callParser(parsingConfig.fileNumber, parsingConfig.language) [line 140]
                │
                └─> SPECIAL CASE: Completion in macro body [lines 183-196]
                    ├─ SETS: inputFileName = getFileItemWithFileNumber(...)->name [line 189]
                    ├─> initializeFileProcessing(args, nargs, &firstPass) [again]
                    └─> parseInputFile() [again]

8.3.3. Xref Mode Flow

PROCESSES ALL SCHEDULED FILES - Xref creates a list of all scheduled files and processes them in a loop.

xref(args) [xref.c:354]
│
└─> callXref(args, isRefactoring) [xref.c:283]
    │
    ├─> IF options.update:
    │   └─> scheduleModifiedFilesToUpdate(isRefactoring) [line 296]
    │       └─ Adds modified files to scheduled list
    │
    ├─> fileItem = createListOfInputFileItems() [line 298]
    │   └─ Creates linked list of ALL scheduled files (sorted by directory)
    │
    └─> FOR LOOP over fileItem list [line 314]
        │
        └─> oneWholeFileProcessing(args, fileItem, &firstPass, ...) [xref.c:179]
            ├─ SETS: inputFileName = fileItem->name [line 181]
            │
            └─> processInputFile(args, &firstPass, &atLeastOneProcessed) [xref.c:149]
                │
                ├─> initializeFileProcessing(args, nargs, &firstPass) [startup.c:490]
                │   ├─ READS: fileName = inputFileName [line 502]
                │   │
                │   └─> computeAndOpenInputFile(inputFileName) [startup.c:112]
                │       └─> initInput(inputFile, inputBuffer, "\n", fileName) [yylex.c]
                │           └─ Sets currentFile.characterBuffer.fileNumber
                │
                ├─ SETS: parsingConfig.fileNumber = currentFileNumber [line 160]
                │   (NOTE: currentFileNumber is DIFFERENT global!)
                │
                └─> parseToCreateReferences(inputFileName) [parsing.c:165]
                    ├─ Gets EditorBuffer using fileName parameter
                    │
                    ├─> initInput(NULL, buffer, "\n", fileName) [line 181]
                    │   └─ DUPLICATE call! Already called in initializeFileProcessing!
                    │
                    ├─ Calls setupParsingConfig(currentFile.characterBuffer.fileNumber) [line 183]
                    │
                    └─> callParser(parsingConfig.fileNumber, parsingConfig.language) [line 190]

8.3.4. LSP Mode Flow (New, Simplified)

parseToCreateReferences(fileName) [parsing.c:165]
├─ Takes fileName as PARAMETER (not from global!)
├─ Gets EditorBuffer
│
├─> initInput(NULL, buffer, "\n", fileName) [line 181]
│   └─ Sets currentFile.characterBuffer.fileNumber
│
├─> setupParsingConfig(currentFile.characterBuffer.fileNumber) [line 183]
│
└─> callParser(parsingConfig.fileNumber, parsingConfig.language) [line 190]

8.3.5. Key Observations

Assignments to `inputFileName`

Server Mode: Set once per file processed

-

processFile() line 205 - sets the file for the current request

-

Special case: macro completion may parse a different file (line 189) to resolve symbols in unexpanded macro bodies

Xref Mode: Set ONCE per file

-

oneWholeFileProcessing() line 181

`requestFileNumber` Only Used in Server Mode

-

Set in prepareInputFileForRequest() (lines 104, 121)

-

Used in parseInputFile() line 136: setupParsingConfig(requestFileNumber)

-

NOT used in Xref mode at all

Confusion: THREE Different File Number Globals!

inputFileName       // The file name being processed
requestFileNumber   // Server: file number from scheduled file (server.c)
currentFileNumber   // Xref: file number after parsing starts (parsing.c:26)

In xref.c line 160:

parsingConfig.fileNumber = currentFileNumber;

But currentFileNumber is defined in parsing.c:26:

int currentFileNumber = -1; /* Currently parsed file, maybe a header file */

The comment reveals the distinction: currentFileNumber can change DURING parsing when entering #include files, while requestFileNumber stays constant as "the file we were asked to process."
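The difference between the two roles can be modelled with a small include stack. The requested file number stays fixed for the whole request. The "current" number follows the lexer into and out of `#include`d files. `ParsingState` and its functions are hypothetical. Only the two roles correspond to `requestFileNumber` and `currentFileNumber`.

```c
#include <assert.h>

#define MAX_INCLUDE_DEPTH 16

typedef struct {
    int requestFileNumber;               /* the file we were asked to process; never changes */
    int includeStack[MAX_INCLUDE_DEPTH]; /* file numbers of the files currently being read */
    int depth;
} ParsingState;

void startParsing(ParsingState *state, int fileNumber) {
    state->requestFileNumber = fileNumber;
    state->includeStack[0] = fileNumber;
    state->depth = 1;
}

void enterInclude(ParsingState *state, int fileNumber) {
    assert(state->depth < MAX_INCLUDE_DEPTH);
    state->includeStack[state->depth++] = fileNumber;
}

void leaveInclude(ParsingState *state) {
    assert(state->depth > 1); /* never pop the requested file itself */
    state->depth--;
}

/* Plays the role of currentFileNumber: the file the lexer is reading right now. */
int currentFileNumberOf(const ParsingState *state) {
    return state->includeStack[state->depth - 1];
}
```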

Double initInput() Call in Xref Mode

In Xref mode, initInput() is called TWICE for the same file:

-

First in initializeFileProcessing() → computeAndOpenInputFile() [startup.c:128]

-

Second in parseToCreateReferences() [parsing.c:181]

This appears to be a bug or wasteful duplication.

`initializeFileProcessing` is Heavy Orchestration

This 500-line function goes through five major phases:

-

Phase 1: Project discovery (find .c-xrefrc)

-

Phase 2: Options processing

-

Phase 3: Compiler interrogation (expensive! runs gcc -v)

-

Phase 4: Memory checkpointing (to skip Phase 3 for same-project files)

-

Phase 5: Finally calls computeAndOpenInputFile() → initInput()

The firstPass parameter gates Phase 4’s memory checkpoint save/restore.
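The idea behind Phase 4 can be modelled as a cache of the expensive compiler interrogation, keyed on the project. When the next file belongs to the same project, the saved result is restored instead of running the compiler again. This is a simplified model under stated assumptions. The real code checkpoints memory rather than a single struct. `CompilerInfo`, `interrogateCompiler()` and `compilerInfoForProject()` are hypothetical. Treating `firstPass` as "always interrogate and save" is an assumed reading of the gating described above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

typedef struct {
    char project[256];     /* project the cached data belongs to */
    char includeDirs[256]; /* stand-in for what the compiler reported */
} CompilerInfo;

int interrogationCount = 0; /* counts the expensive calls, for illustration only */

/* Stand-in for the expensive phase (running the compiler to learn include paths etc.). */
void interrogateCompiler(const char *project, CompilerInfo *info) {
    interrogationCount++;
    snprintf(info->project, sizeof info->project, "%s", project);
    snprintf(info->includeDirs, sizeof info->includeDirs, "%s", "/usr/include");
}

static CompilerInfo checkpoint;
static bool haveCheckpoint = false;

void compilerInfoForProject(const char *project, bool firstPass, CompilerInfo *info) {
    if (!firstPass && haveCheckpoint && strcmp(checkpoint.project, project) == 0) {
        *info = checkpoint;             /* restore: skip the expensive phase */
        return;
    }
    interrogateCompiler(project, info); /* expensive phase */
    checkpoint = *info;                 /* save for the next file in this project */
    haveCheckpoint = true;
}
```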

Naming Inconsistency

-

inputFileName - used in both Server and Xref modes, but set in different places

-

requestFileNumber - only Server mode, represents the file from the request

-

currentFileNumber - only Xref mode(?), set by initInput() after the file is opened

If they represent the same concept (the file being processed), they should have parallel names.

8.3.6. Summary: Multi-File vs Single-File Processing

Server Mode - Single File Per Request

-

Scheduling: All files scheduled ONCE in initServer() → processFileArguments()

-

Processing: Each request picks ONE file via prepareInputFileForRequest():

  -

  Uses getNextScheduledFile() to get the first scheduled file

  -

  FLAWED: unschedules all higher-numbered files (works because `c-xref .` schedules all)

  -

  Sets both inputFileName and requestFileNumber

-

Loop: Infinite request loop in server() - different file per request

Xref Mode - All Files Per Invocation

-

Scheduling: All files scheduled in mainTaskEntryInitialisations() → processFileArguments()

-

Additional: In update mode, scheduleModifiedFilesToUpdate() adds modified files

-

Processing:

  -

  createListOfInputFileItems() creates a list of ALL scheduled files

  -

  Loops over the entire list in callXref() [line 314]

  -

  Each file processed via oneWholeFileProcessing()

  -

  Sets inputFileName for each file

  -

  Uses currentFileNumber (different global!) instead of requestFileNumber

-

Loop: Single invocation processes all files

LSP Mode - Single File Per Request

-

No scheduling: Takes fileName as direct parameter

-

No global state: parseToCreateReferences(fileName) - clean interface

-

Processing: Direct call, no file table lookup needed

-

Modern design: Avoids the legacy scheduling/global state complexity

8.3.7. Opportunities for Alignment

The Server and Xref paths do similar things but with different names and structures, creating cognitive overhead. Potential improvements:

-

Naming consistency: processFile() (server) vs oneWholeFileProcessing() (xref) could both use consistent naming

-

Eliminate duplication: Fix the double initInput() call in the xref path

-

Extract common logic: The inner "process one file" logic should be identical between modes

-

Make differences explicit:

  -

  Server: for (each request) { process one file }

  -

  Xref: for (each scheduled file) { process one file }

The paths are intertwined but different, making it hard to keep in your head which one you’re modifying. Making them more similar where possible would reduce cognitive load during refactoring work.
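The "make differences explicit" point could look roughly like the sketch below. One shared function processes a single file, and the only difference between the modes is where the file names come from. All names here (`Session`, `processOneFile()`, `runXrefMode()`, `runServerRequest()`) are hypothetical, and parsing is reduced to a counter.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    int filesProcessed; /* stand-in for the results of parsing */
} Session;

/* The shared inner step. In the real code this is where input initialisation
   and the parser call would live, exactly once. */
void processOneFile(Session *session, const char *fileName) {
    assert(fileName != NULL);
    session->filesProcessed++;
}

/* Xref mode: one invocation loops over every scheduled file. */
void runXrefMode(Session *session, const char *scheduledFiles[], int count) {
    for (int i = 0; i < count; i++)
        processOneFile(session, scheduledFiles[i]);
}

/* Server mode: one request names one file. */
void runServerRequest(Session *session, const char *requestFile) {
    processOneFile(session, requestFile);
}
```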

8.4. Parsers

C-xref uses a patched version of Berkeley yacc to generate parsers. There are a number of parsers:

-

C

-

Yacc

-

C pre-processor expressions

There might also exist small traces of the Java parser, which was previously a part of the free c-xref, and the C++ parser that existed but was proprietary.

The patch to byacc is mainly to the skeleton and seems to relate mostly to handling of errors and adding a recursive parsing feature that was required for Java, which was supported previously. It is possible that this patch is no longer needed now that Java parsing is not supported, but this has not been tried.

Some changes are also made to be able to accommodate multiple parsers in the same executable, mostly solved by CPP macros renaming the parsing data structures so that they can be accessed using the standard names in the parsing skeleton. The Makefile generates the parsers and renames the generated files as appropriate.
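The renaming trick can be shown with two copies of a minimal "skeleton" in one translation unit. The skeleton code always uses the standard names `yyparse` and `yylval`. Macros defined before each copy map those names to parser-specific ones. The prefixes `c_` and `yacc_` are made up for this example. In the real build the skeleton code comes from the generated parser files.

```c
#include <assert.h>

/* "Parser" 1: map the standard skeleton names to C-parser-specific names. */
#define yyparse c_yyparse
#define yylval  c_yylval
int yylval;                                 /* really defines c_yylval */
int yyparse(void) { yylval = 1; return 0; } /* really defines c_yyparse() */
#undef yyparse
#undef yylval

/* "Parser" 2: the same skeleton code, renamed for the Yacc-grammar parser. */
#define yyparse yacc_yyparse
#define yylval  yacc_yylval
int yylval;                                 /* really defines yacc_yylval */
int yyparse(void) { yylval = 2; return 0; } /* really defines yacc_yyparse() */
#undef yyparse
#undef yylval
```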

8.5. Integrated Preprocessor

C-xrefactory includes its own integrated C preprocessor implementation rather than using the system’s preprocessor (cpp, clang preprocessor, etc.). This is a crucial architectural decision that enables core functionality.

8.5.1. Why Not Use the System Preprocessor?

Using an external preprocessor would mean that all macros would be expanded before c-xrefactory sees the code. This would make it impossible to:

-

Navigate to macro definitions

-

Show macro usage and references

-

Refactor macro names and parameters

-

Complete macro identifiers

-

See macro arguments as distinct symbols

-

Understand the code structure as the programmer wrote it

By parsing the source at the macro level, c-xrefactory operates as a source-level tool rather than a post-preprocessed tool. This allows it to work with the code as developers see and write it, preserving all macro information for navigation and refactoring.
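The small, made-up example below shows what would be lost. As written, `area()` contains a reference to the macro `SQUARE` that navigation, completion and rename can work with. After an external preprocessor (for example `cc -E`), that line reads `return ((side) * (side));`, and the reference to `SQUARE` is gone.

```c
#include <assert.h>

/* As written: SQUARE is a symbol with a definition and references. */
#define SQUARE(x) ((x) * (x))

int area(int side) {
    /* After external preprocessing this becomes: return ((side) * (side)); */
    return SQUARE(side);
}
```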

8.5.2. Implementation Details

The integrated preprocessor is implemented in yylex.c and includes:

-

Macro definition handling (processDefineDirective())

-

Macro expansion with argument substitution

-

Conditional compilation (#if, #ifdef, #ifndef, #elif, #else, #endif)

-

Include file processing (#include, #include_next)

-

Pragma directives (limited support)

-

Expression evaluation for #if directives (cppexp_parser.y)
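Conditional compilation is commonly handled with a stack holding one entry per open `#if` group. Each entry records whether the current branch is active and whether an earlier branch of the group was already taken. The sketch below shows only that general technique. It is an assumption about the approach, not a transcription of the code in `yylex.c`. `#if`/`#elif` conditions are passed in as already-evaluated values, and all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_NESTING 32

typedef struct {
    bool active[MAX_NESTING];      /* is the current branch of this group being compiled? */
    bool branchTaken[MAX_NESTING]; /* has some branch of this group already been taken? */
    int depth;
} ConditionalStack;

/* True when the code at the current nesting level should be processed. */
bool isActive(const ConditionalStack *s) {
    return s->depth == 0 || s->active[s->depth - 1];
}

void onIf(ConditionalStack *s, bool condition) { /* #if, #ifdef, #ifndef */
    assert(s->depth < MAX_NESTING);
    bool parentActive = isActive(s);
    s->active[s->depth] = parentActive && condition;
    s->branchTaken[s->depth] = s->active[s->depth];
    s->depth++;
}

void onElif(ConditionalStack *s, bool condition) {
    assert(s->depth > 0);
    int top = s->depth - 1;
    bool parentActive = top == 0 || s->active[top - 1];
    s->active[top] = parentActive && !s->branchTaken[top] && condition;
    s->branchTaken[top] = s->branchTaken[top] || s->active[top];
}

void onElse(ConditionalStack *s) { onElif(s, true); } /* #else is an #elif that is always true */

void onEndif(ConditionalStack *s) {
    assert(s->depth > 0);
    s->depth--;
}
```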

8.5.3. Limitations

The integrated preprocessor does not support all modern preprocessor features:

-

Platform-specific predefined macros (like __arm64__, __x86_64__) are not automatically defined

-

Some compiler-specific extensions may not be recognized (e.g. __has_feature(), __building_module())

These limitations mean that c-xrefactory may report syntax errors when encountering modern platform headers that use these features, even though the code compiles correctly with standard compilers.
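Clang's documentation suggests a guard of the following form for code that must also build with preprocessors lacking `__has_feature`. Headers that use `__has_feature()` without such a guard are the kind that can produce syntax errors in a preprocessor that does not know the extension. The `BUILT_WITH_ASAN` macro and the function are made up for illustration.

```c
#include <assert.h>

/* Fallback for preprocessors without the Clang extension, so the #if below stays valid. */
#ifndef __has_feature
#define __has_feature(x) 0
#endif

#if __has_feature(address_sanitizer)
#define BUILT_WITH_ASAN 1
#else
#define BUILT_WITH_ASAN 0
#endif

int builtWithAsan(void) { return BUILT_WITH_ASAN; }
```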

8.5.4. Trade-offs

This design choice represents a fundamental trade-off:

-

Gained: Full macro-level navigation and refactoring capabilities

-

Lost: Perfect compatibility with all preprocessor extensions and platform-specific features

The benefit of macro-level refactoring is considered more valuable than perfect preprocessor compatibility, as it is a key differentiator for c-xrefactory.

8.6. Refactoring and the parsers

Some refactorings need more detailed information about the code, maybe all do?

One example, at least, is parameter manipulation. In that case the refactorer calls the appropriate parser (serverEditParseBuffer()), which collects information in the corresponding semantic actions. This information is stored in various global variables, like parameterBeginPosition.

The parser is filling out a ParsedInfo structure which conveys information that can be used e.g. when extracting functions etc.

| At this point I don’t understand exactly how this interaction is performed; there seems to be no way to parse only the appropriate parts, so the whole file needs to be re-parsed. |

Findings:

-

some global variables are set as a result of command line and arguments parsing, depending on which "command" the server is acting on

-

the semantic rules in the parser(s) contain code that matches these global variables and then inserts special lexems in the lexem stream

One example is how a Java 'move static method' was performed. It required a target position. That position was transferred from command line options to global variables. When the Java parser was parsing a class or similar, it (or rather the lexer) looked at that "ddtarget position information" and inserted an OL_MARKER_TOKEN in the stream.

| TODO: What extra "operation" the parsing should perform and return data for should be packaged into some type of "command" or parameter object that should be passed to the parser, rather than relying on global variables. |
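One possible shape for that parameter object is sketched below under stated assumptions. A small request struct is handed to the lexer and replaces globals such as the target position. `ParseRequest`, `TokenKind`, `nextToken()` and the `TOKEN_*` values are hypothetical. Only the idea of injecting a marker token at a target position comes from the description above.

```c
#include <assert.h>
#include <stdbool.h>

/* TOKEN_MARKER plays the role of OL_MARKER_TOKEN. */
typedef enum { TOKEN_IDENTIFIER, TOKEN_MARKER } TokenKind;

typedef struct {
    int line;
    int column;
} Position;

/* Everything the current operation needs from the parse, passed explicitly. */
typedef struct {
    bool insertMarker;     /* does this operation want a marker at all? */
    Position markerTarget; /* where to insert it */
    bool markerInserted;   /* set once the marker has been emitted */
} ParseRequest;

static bool reached(Position at, Position target) {
    return at.line > target.line || (at.line == target.line && at.column >= target.column);
}

/* Emits the marker once the target is reached; the caller then asks again and
   gets the real token at the same position. */
TokenKind nextToken(ParseRequest *request, Position tokenPosition) {
    if (request->insertMarker && !request->markerInserted
        && reached(tokenPosition, request->markerTarget)) {
        request->markerInserted = true;
        return TOKEN_MARKER;
    }
    return TOKEN_IDENTIFIER;
}
```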

8.7. Reading Files

Here are some speculations about how the complex file reading is structured.

Each file is identified by a filenumber, which is an index into the file table, and seems to have a lexBuffer tied to it so that you can just continue from wherever you were. That in turn contains a CharacterBuffer that handles the actual character reading.

And there is also an "editorBuffer"…
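The layering described above can be pictured as nested structures: a file table entry, then a lexem buffer, then a character buffer. This is a simplified model for orientation only. The field names are assumptions, `readCharacter()` is hypothetical, and the real types contain considerably more.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Lowest layer: raw characters (simplified: whole content already in memory). */
typedef struct {
    const char *text;
    size_t next;    /* read position, kept so reading can resume later */
    int fileNumber;
} CharacterBuffer;

/* Middle layer: lexems produced from the characters (simplified to a counter). */
typedef struct {
    CharacterBuffer characterBuffer;
    int lexemCount;
} LexemBuffer;

/* Top layer: a file table entry, indexed by file number. */
typedef struct {
    const char *name;
    LexemBuffer lexemBuffer;
} FileEntry;

/* Returns the next character, or EOF at the end of the text. */
int readCharacter(CharacterBuffer *buffer) {
    char c = buffer->text[buffer->next];
    if (c == '\0')
        return EOF;
    buffer->next++;
    return (unsigned char)c;
}
```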

The intricate interactions between these are hard to follow, as the code here is littered with short names which are copies of fields in the structures, and infested with many macros, probably in an ignorant attempt at optimizing. ("Premature optimization is the root of all evil" and "Make it work, make it right, make it fast".)

It seems that everything starts in initInput() in yylex.c, where the only existing call to fillFileDescriptor() is made. But you might wonder why this function does some initial reading; this should be pushed down to the buffers in the file descriptor.

8.7.1. Lexing/scanning

Lexing/scanning is performed in two layers, one in lexer.c, which seems to be doing the actual lexing into lexems which are put in a lexembuffer. This contains a sequence of encoded and compressed symbols, each starting with a LexemCode followed by extra data, like a Position. This extra data seems to always be added, although it is not always necessary.

The higher level "scanning" is performed, as per usual, by yylex.c. lexembuffer defines some functions to put and get lexems, chars (identifiers and file names?) as well as integers and positions.

At this point the put/get lexem functions take a pointer to a pointer to chars (which presumably is the lexem stream in the lexembuffer) which it also advances. This requires the caller to manage the LexemBuffer’s internal pointers outside and finally set them right when done.

It would be much better to call the "putLexem()"-functions with a lexemBuffer, but there seem to be a few cases where the destination (often dd) is not a lexem stream inside a lexemBuffer. These might be related to macro handling.

| This is a work-in-progress. Currently most of the "normal" usages are prepared to use the LexemBuffer’s pointers. But the handling of macros and defines are cases where the lexems are not put in a LexemBuffer. See the TODO.org for current status of this Mikado sequence. |
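The difference between the two calling styles can be sketched as follows. The encoding is an assumption made for illustration: one byte of lexem code, then line and column as two-byte little-endian values. The fixed buffer size, the lexem codes and all function names are also assumptions. The real encoding in lexembuffer may well differ.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { LEXEM_IDENTIFIER = 1, LEXEM_NUMBER = 2 } LexemCode; /* hypothetical codes */

typedef struct { int line; int column; } Position;

typedef struct {
    unsigned char lexems[1024];
    unsigned char *write; /* next free byte, owned by the buffer */
    unsigned char *read;  /* next byte to read, owned by the buffer */
} LexemBuffer;

void initLexemBuffer(LexemBuffer *lb) { lb->write = lb->read = lb->lexems; }

/* Current style: the caller owns a cursor into some byte stream and passes its address. */
void putLexemCodeAt(unsigned char **destination, LexemCode code) {
    *(*destination)++ = (unsigned char)code;
}

static void putInt16At(unsigned char **destination, int value) {
    *(*destination)++ = (unsigned char)(value & 0xff);
    *(*destination)++ = (unsigned char)((value >> 8) & 0xff);
}

/* Suggested style: the buffer manages its own pointers; callers never touch them. */
void putLexemWithPosition(LexemBuffer *lb, LexemCode code, Position position) {
    assert(lb->write + 5 <= lb->lexems + sizeof lb->lexems);
    putLexemCodeAt(&lb->write, code); /* the cursor-style helpers remain usable internally */
    putInt16At(&lb->write, position.line);
    putInt16At(&lb->write, position.column);
}

static int getInt16(LexemBuffer *lb) {
    int value = lb->read[0] | (lb->read[1] << 8);
    lb->read += 2;
    return value;
}

LexemCode getLexemWithPosition(LexemBuffer *lb, Position *position) {
    LexemCode code = (LexemCode)*lb->read++;
    position->line = getInt16(lb);
    position->column = getInt16(lb);
    return code;
}
```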

8.7.2. Semantic information

As the refactoring functions need some amount of semantic information, in the sense of information gathered during parsing, this information is collected in various ways when c-xref calls the "sub-task" to do the parsing required.

Two structures hold information about various things, among which is the memory index at certain points of the parsing. Thus it is possible to verify, e.g., that an editor region does not cover a break in block or function structure. This structure is, at the time of writing, called parsedInfo and definitely needs to be tidied up.
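As an illustration of the kind of check this enables, the sketch below assumes that parsing has recorded the offsets where blocks open and close, and verifies that a region neither closes a block opened before it nor leaves a block open. `BlockMark` and `regionIsWellNested()` are hypothetical and say nothing about the actual layout of parsedInfo.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical record of a block boundary seen during parsing. */
typedef struct {
    int  offset;   /* character offset of the '{' or '}' */
    bool opens;    /* true for '{', false for '}' */
} BlockMark;

/* True if the region [begin, end) does not cut through block structure:
 * it never closes a block it did not open, and closes all it opens. */
static bool regionIsWellNested(const BlockMark *marks, int count, int begin, int end) {
    int depth = 0;
    assert(begin <= end);
    for (int i = 0; i < count; i++) {
        if (marks[i].offset < begin || marks[i].offset >= end)
            continue;                 /* boundary outside the region */
        depth += marks[i].opens ? 1 : -1;
        if (depth < 0)
            return false;             /* closes a block opened before the region */
    }
    return depth == 0;                /* blocks opened inside must also close inside */
}
```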

8.8. Reference Database

c-xref run in "xref" mode creates, or updates, a database of references for all externally visible symbols it encounters.

A good design should have a clean and generic interface to the reference database, but chiselling that out is still a work in progress.

8.8.1. Architecture Overview

c-xrefactory’s core functionality relies on a sophisticated symbol database that stores cross-references, definitions, and usage information for all symbols in a project. The database is implemented as a collection of compact, text-based .cx files and supports:

- Creation via -create operations that parse all project files
- Updates via -update operations (incremental or full)
- Queries during symbol lookup operations

8.8.2. Database Structure and Format

The symbol database uses a hash-partitioned file structure:

cxrefs/
├── files   # File metadata and paths
├── 0000    # Symbol data for hash bucket 0
├── 0001    # Symbol data for hash bucket 1
└── ...     # Additional hash buckets based on referenceFileCount

Each .cx file contains structured records with specific keys:

- CXFI_FILE_NAME: File paths and metadata
- CXFI_SYMBOL_NAME: Symbol names, types, and attributes
- CXFI_REFERENCE: Individual symbol references with positions and usage types

8.8.3. Symbol Resolution Pipeline

The symbol lookup process follows this pipeline:

1. Cursor Position Input
2. Parse current file to identify symbol at position
3. scanReferencesToCreateMenu(symbolName)
4. Load symbol data from .cx files
5. Populate sessionData.browserStack
6. Find best definition via olcxOrderRefsAndGotoDefinition()
7. Navigate to definition position

8.8.4. Key Data Structures

Browser Stack (sessionData.browserStack)

The browser stack is the runtime data structure for symbol navigation:

typedef struct OlcxReferences {
    Reference *current;          // Current reference
    Reference *references;       // All references for symbol
    SymbolsMenu *symbolsMenu;    // Available symbols for selection
    Position callerPosition;     // Where lookup was initiated
    ServerOperation operation;   // Type of operation (OLO_PUSH, etc.)
} OlcxReferences;

typedef struct {
    OlcxReferences *top;         // Current symbol being browsed
    OlcxReferences *root;        // Stack base
} OlcxReferencesStack;

Reference Information

typedef struct Reference {
    Position position;           // File, line, column
    Usage usage;                 // UsageDefined, UsageDeclared, UsageUsed
    struct Reference *next;      // Linked list of references
} Reference;

typedef struct {
    char *linkName;              // Symbol name with scope information
    Type type;                   // Symbol type (function, variable, etc.)
    Storage storage;             // Storage class
    Scope scope;                 // Visibility scope
    int includedFileNumber;      // File containing symbol
    Reference *references;       // All references to this symbol
} ReferenceItem;

8.8.5. Database Operations

Database Creation (-create)

- Parse all project files using C/Yacc parsers
- Generate ReferenceItem entries for each symbol
- Create Reference entries for each symbol usage
- Write structured records to .cx files
- Build hash-based index for fast lookup

Database Updates (-update)

- Full Update: Rebuild entire database
- Fast Update: Only process modified files based on timestamps
- Incremental: Smart detection of changed dependencies
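The fast update essentially compares the modification time recorded in the database's file information lines with the file's current modification time. A minimal POSIX sketch of that decision; `fileNeedsReparse()` is a hypothetical name, and the real update also has to consider dependencies such as included headers.

```c
#include <assert.h>
#include <stdbool.h>
#include <sys/stat.h>
#include <time.h>

/* True if 'path' has changed since 'recordedMtime' (the value stored in the
 * reference database), or if it cannot be stat()ed at all. */
static bool fileNeedsReparse(const char *path, time_t recordedMtime) {
    struct stat st;
    assert(path != NULL);
    if (stat(path, &st) != 0)
        return true;             /* missing or unreadable: let the update decide */
    return st.st_mtime != recordedMtime;
}
```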

Symbol Lookup (OLO_PUSH operations)

- scanReferencesToCreateMenu(symbolName) loads symbol data
- createSelectionMenu() builds navigation menus
- olProcessSelectedReferences() populates browser stack
- olcxOrderRefsAndGotoDefinition() finds best definition

8.8.6. LSP Integration Challenges

Architectural Mismatch

The current architecture was designed for batch processing and persistent databases, while LSP requires on-demand processing and responsive queries.

Current LSP Problems:

- Persistent File Dependency: LSP findDefinition() calls scanReferencesToCreateMenu(), which expects pre-existing .cx files
- Project-Wide Requirement: Full project analysis required before individual file operations
- Batch Processing Model: No support for incremental, per-request symbol resolution
- Cold Start Problem: New projects have no .cx files, causing all LSP operations to fail

Error Flow in LSP Mode:

LSP textDocument/definition request
  ↓
findDefinition() called
  ↓
scanReferencesToCreateMenu() called
  ↓
No .cx files exist → empty results
  ↓
browserStack remains empty
  ↓
All definition requests return the same default position

Performance Characteristics

| Operation | File-Based (.cx) | On-Demand Parsing |
|---|---|---|
| Cold Start | Requires | Parse file immediately |
| Warm Queries | O(1) hash lookup | O(file_size) parsing |
| Memory Usage | Low (streaming) | High (in-memory cache) |
| Incremental Updates | Smart file tracking | Per-file invalidation |
| Multi-project | Separate databases | Workspace-scoped |

| A proposal for a unified symbol database architecture has been documented. See Proposed Unified Symbol Database in the Proposed Refactorings chapter. |

8.8.7. CXFILE

The current implementation of the reference database is file based, with an optimized storage format.

There is limited support for automatically keeping these updated during an edit-compile cycle; you might have to update them manually now and then.

The project settings (or command line options) indicate where the file(s) are created, and one option, -refnum, controls the number of files to be used.

This file (or files) contains compact, but textual, representations of the cross-reference information. The format is somewhat complex, but here are some things that I think I have found out:

- the encoding has single character markers which are listed at the top of cxfile.c
- the coding seems to often start with a number and then a character, such as '4l' (4 ell), which means line 4, or '23c', which means column 23
- references seem to be optimized not to repeat information, e.g. '15l3cr7cr' means that there are two references on line 15, one in column 3 and the other in column 7
- so there is a notion of "current" for all values, which need not be repeated
- e.g. references all use 'fsulc' fields, i.e. file, symbol index, usage, line and column, but do not repeat a 'fsulc' value as long as it is the same
- some "fields" have a length indicator before them, such as filenames ('6:/abc.c'), indicated by ':', and version information ('34v file format: C-xrefactory 1.6.0 '), indicated by 'v'.

So a line might say

12205f 1522108169p m1ia 84:/home/...

The line identifies the file with id 12205. The file was last included in an update of refs at some time identified by 1522108169 (mtime), has not been part of a full update of xrefs, and was mentioned on the command line. (I don’t know what the 'a' means…) Finally, the file name itself is 84 characters long.

| TODO: Build a tool to decipher this so that tests can query the generated data for expected data. This is now partly ongoing in the 'utils' directory. |

8.8.8. Reference Database Reading

All information about an externally visible symbol is stored in one, and only one reference file, determined by hashing the linkname of the symbol. So it will always suffice to read one reference file when consulting the reference database (in the form of CXFILE) for a symbol.
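A sketch of that bucket selection follows. It uses the djb2 string hash purely for illustration (c-xref has its own hash function) and the four-digit file names from the directory layout shown earlier; `referenceFileCount` plays the role of the value set with -refnum, and both function names are hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* djb2 string hash; illustrative only, not the hash c-xref uses. */
static unsigned long hashLinkName(const char *linkName) {
    unsigned long hash = 5381;
    for (const unsigned char *p = (const unsigned char *)linkName; *p != '\0'; p++)
        hash = hash * 33 + *p;
    return hash;
}

/* Writes the name of the reference file ("0000", "0001", ...) holding all
 * information about 'linkName' into 'fileName' (at least 8 bytes). */
static void referenceFileNameFor(const char *linkName, int referenceFileCount,
                                 char fileName[8]) {
    assert(referenceFileCount > 0 && referenceFileCount <= 10000);
    int bucket = (int)(hashLinkName(linkName) % (unsigned long)referenceFileCount);
    snprintf(fileName, 8, "%04d", bucket);
}
```

Because the bucket depends only on the linkname, a lookup needs to open exactly one file, which is the property described above.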

The reading of the CXFILE format is controlled by `scanFunctionTable`s. These consist of a list of entries, one for each key/tag/recordCode (see format description above) that the scan will process.

As the reference file reading encounters a key/tag/recordCode it will consult the table and see if there is an entry pointing to a handler function for that key/tag/recordCode. If so, it will be called.
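A minimal sketch of such table-driven dispatch. The entry layout, the -1 sentinel and the handler signature are assumptions for illustration; the real `scanFunctionTable` entries in cxfile.c may look different and carry more information.

```c
#include <assert.h>
#include <stddef.h>

typedef void (*RecordHandler)(int recordCode, int value, void *context);

typedef struct {
    int           recordCode;   /* the key/tag character, e.g. 'f' */
    RecordHandler handler;      /* called when that record is read */
} ScanFunctionEntry;

/* Calls the handler registered for recordCode, if any. The table ends with
 * an entry whose recordCode is -1. Returns 1 if a handler was called. */
static int dispatchRecord(const ScanFunctionEntry *table, int recordCode,
                          int value, void *context) {
    assert(table != NULL);
    for (const ScanFunctionEntry *e = table; e->recordCode != -1; e++) {
        if (e->recordCode == recordCode) {
            if (e->handler != NULL)
                e->handler(recordCode, value, context);
            return e->handler != NULL;
        }
    }
    return 0;                   /* no entry: this scan ignores the record */
}

/* Example handler: remember the last file number seen. */
static void onFileNumber(int recordCode, int value, void *context) {
    (void)recordCode;
    *(int *)context = value;
}
```

Different scans can then use different tables over the same reader, each only listing the records it cares about.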

8.9. Editor Plugin

The editor plugin has three different responsibilities:

- serve as the UI for the user when interacting with certain c-xref related functions
- query the c-xref server for symbol references and support navigating these in the source
- initiate source code operations ("refactorings") and execute the resulting edits

Basically Emacs (and probably other editors) starts c-xref in "server-mode" using -server, which connects the editor with c-xref through stdout/stdin. If you have (setq c-xref-debug-mode t) this command is logged in the *Messages* buffer with the prefix "calling:".

Commands are sent from the editor to the server on its standard input. They look very much like normal command line options, and in fact c-xref will parse that input in the same way using the same code. When the editor sends an end-of-options line, the server will start executing whatever was sent, and return some information in the file given as an -o option when the editor starts the c-xref server process. The file is named and created by the editor and usually resides in /tmp. With c-xref-debug-mode set to on this is logged as "sending:". If you (setq c-xref-debug-preserve-tmp-files t) Emacs will also not delete the temporary files it creates, so that you can inspect them afterwards.

When the server has finished processing the command and placed the output in the output file, it sends a <sync> reply.

The editor can then pick up the result from the output file and do what it needs to do with it ("dispatching:").
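The request cycle can be modelled as below. This is a simplified sketch, not c-xref's server code: the hypothetical `serveOneRequest()` just collects option lines until `end-of-options`, writes a placeholder result to a stream standing in for the -o file, and then replies `<sync>`.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_LINE 1024

/* Reads option lines from 'in' until "end-of-options", writes a result to
 * 'outputFile' and replies "<sync>" on 'reply'. Returns the number of option
 * lines received, or -1 if input ended before end-of-options. */
static int serveOneRequest(FILE *in, FILE *outputFile, FILE *reply) {
    char line[MAX_LINE];
    int optionCount = 0;

    assert(in != NULL && outputFile != NULL && reply != NULL);
    while (fgets(line, sizeof line, in) != NULL) {
        line[strcspn(line, "\n")] = '\0';            /* strip newline */
        if (strcmp(line, "end-of-options") == 0) {
            /* The real server would now parse the collected lines with the
             * command line option code and execute the requested operation. */
            fprintf(outputFile, "received %d options\n", optionCount);
            fflush(outputFile);
            fprintf(reply, "<sync>\n");              /* editor may read the result now */
            fflush(reply);
            return optionCount;
        }
        optionCount++;
    }
    return -1;
}
```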

8.9.1. Invocations

The editor invokes a new c-xref process for the following cases:

- Refactoring: Each refactoring operation calls a new instance of c-xref?
- Create Project: When a c-xref function is executed in the editor and there is no project covering that file, an interactive "create project" session is started, which is run by a separate c-xref process.

8.9.2. Buffers

There is some magical editor buffer management happening inside of c-xref which is not clear to me at this point. Basically it looks like the editor side tries to keep the server in sync with which buffers are opened with what file…

At this point I suspect that -preload <file1> <file2> means that the editor has saved a copy of <file1> in <file2> and requests the server to set up a "buffer" describing that file and use it instead of the <file1> that resides on disk.

This is essential when doing refactoring, since the current version of the file most likely only exists in the editor, so the editor has to tell the server the current content somehow; this is the -preload option.
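If that reading is right, the server side needs little more than a table from the real file name to the editor's copy, consulted whenever a file is opened. The sketch assumes exactly that; `registerPreload()` and `fileToRead()` are hypothetical, and the actual buffer handling in c-xref does considerably more.

```c
#include <assert.h>
#include <string.h>

#define MAX_PRELOADS 16

typedef struct {
    const char *realFile;   /* <file1>: the name the sources refer to */
    const char *copyFile;   /* <file2>: the editor's saved copy of the buffer */
} Preload;

/* The strings are not copied; the caller must keep them alive. */
static Preload preloads[MAX_PRELOADS];
static int preloadCount = 0;

/* Handles "-preload <file1> <file2>"; a later preload replaces an earlier one. */
static void registerPreload(const char *realFile, const char *copyFile) {
    for (int i = 0; i < preloadCount; i++) {
        if (strcmp(preloads[i].realFile, realFile) == 0) {
            preloads[i].copyFile = copyFile;
            return;
        }
    }
    assert(preloadCount < MAX_PRELOADS);
    preloads[preloadCount++] = (Preload){realFile, copyFile};
}

/* Returns the file that should actually be read when 'fileName' is opened. */
static const char *fileToRead(const char *fileName) {
    for (int i = 0; i < preloadCount; i++)
        if (strcmp(preloads[i].realFile, fileName) == 0)
            return preloads[i].copyFile;
    return fileName;        /* not preloaded: read it from disk as usual */
}
```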

8.10. Editor Server

When serving an editor, the c-xrefactory application is divided into the server, c-xref, and the editor part. At this point only Emacs is supported, so that part is implemented in the editor/Emacs-packages.

8.10.1. Interaction

The initial invocation of the edit server creates a process with which communication is over stdin/stdout using a protocol which from the editor is basically a version of the command line options.

When the editor has delivered all information to the server it sends 'end-of-options' as a command, and the edit server processes whatever it has and responds with <sync>, which means that the editor can fetch the result in the file it named as the output file using the '-o' option.

| As long as the communication between the editor and the server is open, the same output file will be used. This makes it hard to catch some interactions, since an editor operation might result in multiple interactions, and the output file is then re-used. |

Setting the emacs variable c-xref-debug-mode forces the editor to copy the content of such an output file to a separate temporary file before re-using it.

For some interactions the editor starts a completely new and fresh c-xref process, see below. And actually you can’t do refactorings using the server; they have to be separate calls. (Yes?) I have yet to discover why this design choice was made.

There are many things in the sources that handle refactorings separately, such as refactoring_options, which is a separate copy of the options structure used only when refactoring.


8.10.2. Protocol

Communication between the editor and the server is performed using text through standard input/output to/from c-xref. The protocol is defined in src/protocol.tc and must match editor/emacs/c-xrefprotocol.el.

The definition of the protocol only caters for the server→editor part, the editor→server part consists of command lines resembling the command line options and arguments, and actually is handled by the same code.

The file protocol.tc is included in protocol.h and protocol.c, which generate definitions and declarations for the elements using some macros.
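This is the classic "X macro" technique: one list of items, expanded several times with different definitions of the item macro. A minimal self-contained sketch follows; the item names are invented, and for brevity the list is a macro here rather than a separate protocol.tc that is presumably #included after defining PROTOCOL_ITEM.

```c
#include <assert.h>
#include <string.h>

/* Stand-in for protocol.tc: one entry per protocol element (names invented). */
#define PROTOCOL_ITEMS(ITEM)                  \
    ITEM(PPC_GOTO,        "goto")             \
    ITEM(PPC_PRECHECK,    "precheck")         \
    ITEM(PPC_REPLACEMENT, "replacement")

/* "protocol.h" part: declarations generated from the list. */
#define DECLARE_ITEM(name, text) extern const char name[];
PROTOCOL_ITEMS(DECLARE_ITEM)
#undef DECLARE_ITEM

/* "protocol.c" part: definitions generated from the same list. */
#define DEFINE_ITEM(name, text) const char name[] = text;
PROTOCOL_ITEMS(DEFINE_ITEM)
#undef DEFINE_ITEM
```

Since every consumer expands the same list, adding an element in one place updates the declarations, the definitions and, with a suitable macro, the elisp side as well.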

There is a similar structure with c-xrefprotocol.elt, which includes protocol.tc to wrap the PROTOCOL_ITEMs into defvars.

There is also some Makefile trickery that ensures that the C and elisp implementations are in sync.

One notable detail of the protocol is that it carries strings in their native format, UTF-8. This means that lengths need to indicate characters, not bytes.
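Counting characters rather than bytes in UTF-8 amounts to not counting continuation bytes, which always have the bit pattern 10xxxxxx. A minimal sketch, assuming the input is valid UTF-8 (the function name is hypothetical):

```c
#include <assert.h>
#include <stddef.h>

/* Number of characters (code points) in a NUL-terminated UTF-8 string:
 * every byte except a continuation byte (10xxxxxx) starts a new character. */
static size_t utf8CharacterCount(const char *s) {
    size_t count = 0;
    assert(s != NULL);
    for (const unsigned char *p = (const unsigned char *)s; *p != '\0'; p++)
        if ((*p & 0xC0) != 0x80)
            count++;
    return count;
}
```

For example, "räksmörgås" is 13 bytes but 10 characters, so a `len` attribute for it would have to be 10.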

8.10.3. Invocation of server

The editor fires up a server and keeps talking over the established channel (elisp function 'c-xref-start-server-process'). This probably puts extra demands on the memory management in the server, since it might need to handle multiple information sets and options (as read from a .cxrefrc-file) for multiple projects simultaneously over a longer period of time. (E.g. if the user enters the editor starting with one project and then continues to work on another then new project options need to be read, and new reference information be generated, read and cached.)

| TODO: Figure out and describe how this works by looking at the elisp-sources. |

FINDINGS:

- c-xref-start-server-process in c-xref.el
- c-xref-send-data-to-running-process in c-xref.el
- c-xref-server-call-refactoring-task in c-xref.el

8.10.4. Communication Protocol

The editor server is started using the appropriate command line option and then it keeps the communication over stdin/stdout open.

The editor part sends command line options to the server, which look something like this (from the read_xrefs test case):

-encoding=european -olcxpush -urldirect "-preload" "<file>" "-olmark=0" "-olcursor=6" "<file>" -xrefrc ".c-xrefrc" -p "<project>" end-of-options

In this case the "-olcxpush" is the operative command which results in the following output

<goto> <position-lc line=1 col=4 len=66>CURDIR/single_int1.c</position-lc> </goto>

As we can see from this interaction, the server will handle (all?) input as a command line and manage the options as if it was a command line invocation.

This explains the intricate interactions between the main program and the option handling.

The reason behind this might be that a user of the editor might be editing files on multiple projects at once, so every interrogation/operation needs to clearly set the context of that operation, which is what a user would do with the command line options.

8.10.5. OLCX Naming

It seems that all on-line editing server functions have an olcx prefix, "On-Line C-Xrefactory", maybe…

8.11. Refactoring

This is, of course, the core of why I want to restore this: to get at its refactoring capabilities. So far, much is not understood, but here are some bits and pieces.

8.11.1. Editor interface

One thing that really confused me in the beginning was that the editor, primarily Emacs, doesn’t use the actual server that it has started for refactoring operations (and perhaps for other things too?). Instead it creates a separate instance that it talks to about a single refactoring.

I’ve just managed to create the first automatic test for refactorings, olcx_refactory_rename. It was created by running the sandboxed emacs to record the communication and thus finding the commands to use.

Based on this learning it seems that a refactoring typically is a single invocation of c-xref with appropriate arguments (start & stop markers, the operation, and so on) and the server then answers with a sequence of operations, like

<goto>
<position-off off=3 len=<n>>CURDIR/test_source/single_int1.c</position-off>
</goto>
<precheck len=<n>> single_int_on_line_1_col_4;</precheck>
<replacement>
<str len=<n>>single_int_on_line_1_col_4</str> <str len=<n>>single_int_on_line_1_col_44</str>
</replacement>

8.11.2. Interactions

I haven’t investigated the internal flow of such a sequence, but it is starting to look like c-xref is internally re-reading the initialization. I’m not sure at this point what this means; I hope it’s not internal recursion…

8.11.3. Extraction

Each type of refactoring has its own little "language". E.g. extracting a method/function using -refactory -rfct-extract-function will return something like

<extraction-dialog type=newFunction_> <str len=20> newFunction_(str);
</str>
<str len=39>static void newFunction_(char str[]) {
</str>
<str len=3>}
</str>
<int val=2 len=0></int>
</extraction-dialog>

So there is much logic in the editor for this. I suspect that the three <str> parts are

- what to replace the current region with
- what to place before the current region
- what to place after the current region

If this is correct, then all extractions copy the region verbatim and the server only has to figure out how to "glue" that to a semantically correct call/argument list.

As a side note the editor asks for a new name for the function and then calls the edit server with a rename request (having preloaded the new source file(s) of course).

8.11.4. Protocol

Deciphering the interaction between an editor and the edit server in c-xrefactory isn’t easy. The protocol isn’t very clear or concise. Here I’m starting to collect the important bits of the invocation, the required and relevant options and the returned information.

The test cases for various refactoring operations should give you some more details.

All of these require a -p (project) option to know which c-xref project options to read.

General Principles

Refactorings are done using a separate invocation; the edit server mode cannot handle refactorings. At least that is how the Emacs client does it (I haven’t looked at the Jedit version).

I suspect that it once was a single server that did both the symbol management and the refactoring, as there are remnants of a separate instance of the option structure named "refactoringOptions". Also, the check for the refactoring mode is done using options.refactoringRegime == RegimeRefactory, which seems strange.

Anyway, if the refactoring succeeds, the suggested edits are, as usual, in the communication buffer.

However, there are a couple of cases where the communication does not end there. Possibly because the client needs to communicate some information back before the refactoring server can finish the job, like presenting some menu selection.

My guess at this point is that it is the refactoring server that closes the connection when it is done…

Rename

Invocation: -rfct-rename -renameto=NEW_NAME -olcursor=POSITION FILE

Semantics: The symbol under the cursor (at POSITION in FILE) should be renamed (replaced at all occurrences) by NEW_NAME.

Result: sequence of

<goto>
<position-off off=POSITION len=N>FILE</position-off>
</goto>
<precheck len=N>STRING</precheck>

followed by sequence of

<goto>
<position-off off=POSITION len=N>FILE</position-off>
</goto>
<replacement>
<str len=N>ORIGINAL</str> <str len=N>REPLACEMENT</str>
</replacement>

Protocol Messages

- <goto>{position-off}</goto> → editor: Requests the editor to move the cursor to the indicated position (file, position).
- <precheck len={int}>{string}</precheck> → editor: Requests that the editor verify that the text under the cursor matches the string.
- <replacement>{str}{str}</replacement>: Requests that the editor replace the string under the cursor, which should be 'string1', with 'string2'.
- <position-off off={int} len={int}>{absolute path to file}</position-off>: Indicates a position in the given file. 'off' is the character position in the file.
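Put together, the editor's handling of one rename step could look like the sketch below: check the <precheck> text at the offset from <position-off>, then replace the first <str> with the second. For simplicity it works on a byte buffer with byte offsets, whereas the protocol counts characters; `precheck()` and `applyReplacement()` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* <precheck>: does the text at 'offset' start with 'expected'? */
static bool precheck(const char *buffer, size_t offset, const char *expected) {
    assert(offset <= strlen(buffer));
    return strncmp(buffer + offset, expected, strlen(expected)) == 0;
}

/* <replacement>: replace 'original' at 'offset' with 'replacement', in place.
 * 'capacity' is the size of 'buffer'. Returns false if the text at 'offset'
 * is not 'original' or the result would not fit. */
static bool applyReplacement(char *buffer, size_t capacity, size_t offset,
                             const char *original, const char *replacement) {
    size_t bufferLength = strlen(buffer);
    size_t originalLength = strlen(original);
    size_t replacementLength = strlen(replacement);

    if (!precheck(buffer, offset, original))
        return false;
    if (bufferLength - originalLength + replacementLength + 1 > capacity)
        return false;
    /* Move the tail (including the terminating NUL), then copy in the new text. */
    memmove(buffer + offset + replacementLength,
            buffer + offset + originalLength,
            bufferLength - offset - originalLength + 1);
    memcpy(buffer + offset, replacement, replacementLength);
    return true;
}
```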

8.12. Memory handling

c-xrefactory uses custom memory management via arena allocators rather than malloc/free for performance-critical operations.

See the Modules chapter for the design and architecture of the Memory module, and the Data Structures chapter for details on the arena allocator data structure and allocation model.

For debugging memory issues, especially arena lifetime violations, see the Development Environment chapter.

8.12.1. The Memory Type

Memory allocation is managed through the Memory structure, which implements an arena/bump allocator. Different memory arenas serve different purposes:

- cxMemory: Cross-reference database (with overflow handling)
- ppmMemory: Preprocessor macro expansion
- macroBodyMemory: Macro definition storage
- macroArgumentsMemory: Macro argument expansion
- fileTableMemory: File table entries

See Modules chapter for detailed description of each arena’s purpose and lifetime.

8.12.2. Option Memory

The optMemory arena requires special handling because Options structures are copied during operation. When copying, all pointers into option memory must be adjusted to point into the target structure’s memory area, not the source’s.

Functions like copyOptions() perform this pointer adjustment through careful memory arithmetic, traversing a linked list of all memory locations that need updating.

| The linked list nodes themselves are allocated in the Options structure’s dynamic memory. |
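The core of that adjustment is plain pointer arithmetic: a pointer that pointed a certain distance into the source's memory area must point the same distance into the target's. A minimal sketch, using a hypothetical `OptionsSketch` with its own string area and a fixed set of pointer fields, instead of the linked list of locations that the real copyOptions() traverses:

```c
#include <assert.h>
#include <string.h>

typedef struct {
    char *projectName;     /* points into memory[] */
    char *outputFile;      /* points into memory[] */
    char  memory[128];     /* option strings are stored here */
} OptionsSketch;

/* Rebases a pointer into src->memory so that it points into dst->memory. */
static char *rebase(const OptionsSketch *src, OptionsSketch *dst, char *p) {
    if (p == NULL)
        return NULL;
    assert(p >= src->memory && p < src->memory + sizeof src->memory);
    return dst->memory + (p - src->memory);
}

static void copyOptionsSketch(OptionsSketch *dst, const OptionsSketch *src) {
    *dst = *src;   /* copies memory[] too, but the pointers still point into src */
    dst->projectName = rebase(src, dst, src->projectName);
    dst->outputFile  = rebase(src, dst, src->outputFile);
}
```

Forgetting one pointer in such a scheme leaves the copy silently sharing strings with the original, which is why the real code keeps an explicit list of every location to adjust.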

8.13. Configuration

The legacy c-xref normally, in "production", uses a common configuration file in the user's home directory, .c-xrefrc. When a new project is defined, its options will be stored in this file as a new section.

It is possible to point to a specific configuration file using the command line option -xrefrc, which is used extensively in the tests to isolate them from the user's configuration.

Each "project" or "section" requires a name, which is the argument to the -p command line option, and it may contain most other command line options, one per line. These are always read before anything else, unless -no-stdop is used. This allows for different "default" options for each project.

8.13.1. Options

There are three possible sources for options:

- Configuration files (~/.c-xrefrc)
- Command line options at invocation, including server
- Piped options sent to the server in commands

Not all options are relevant in all cases.

All option sources use exactly the same format, so that the same code for decoding them can be used.

8.13.2. Logic

When the editor has a file open it needs to "belong" to a project. The logic for finding which is very intricate and complicated.

In this code there are also checks for things like whether the file is already in the index, or whether the configuration file has changed since last time, indicating that there are scenarios that are more complicated (the server, obviously).

But I also think this code should be simplified a lot.

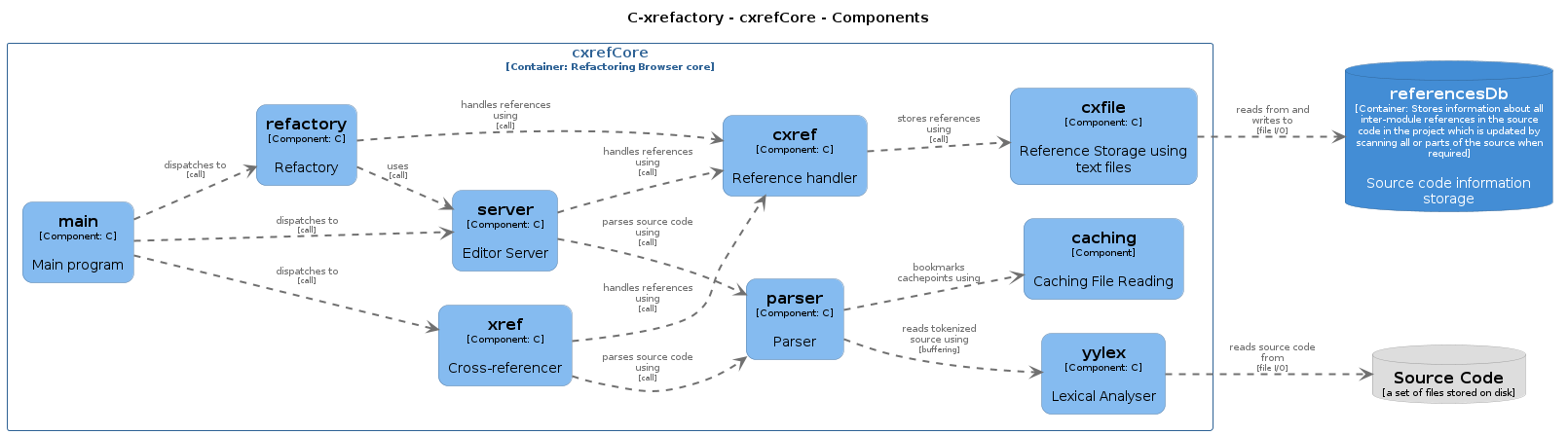

9. Modules

The current state of c-xrefactory is not such that clean modules can easily be identified and located. This is obviously one important goal of the continuing refactoring work.

To be able to do that we need to understand the functionality enough so that clusters of code can be refactored to be more and more clear in terms of responsibilities and interfaces.

This section makes a stab at identifying some candidates for modules, as illustrated by the component diagram for cxrefCore.

9.1. Yylex

9.1.1. Responsibilities

- Transform source text to sequences of lexems and additional information
- Register and apply C pre-processor macros and defines, as well as defines made as command line options (-D)
- Handle include files by pushing and popping read contexts

9.1.2. Interface

The yylex module has the standard interface required by any yacc-based parser, which is a simple yylex(void) function.
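To make the include handling concrete, here is a toy yylex(void) reading from a stack of input contexts: an include pushes a context, and reaching the end of one pops back to the includer. Token codes, `pushInput()` and the use of in-memory strings as "files" are hypothetical simplifications; yylex.c is far more involved.

```c
#include <assert.h>
#include <ctype.h>

enum { TOKEN_EOF = 0, TOKEN_IDENTIFIER = 258 };   /* yacc-style token codes */

#define MAX_INCLUDE_DEPTH 8

typedef struct {
    const char *text;    /* contents of the "file" being read */
    int         index;   /* read position within text */
} InputContext;

static InputContext inputStack[MAX_INCLUDE_DEPTH];
static int inputDepth = 0;

/* Called for an include: continue reading from 'text' until it is exhausted. */
static void pushInput(const char *text) {
    assert(inputDepth < MAX_INCLUDE_DEPTH);
    inputStack[inputDepth++] = (InputContext){text, 0};
}

/* Current character of the innermost context, or -1 at its end (no popping). */
static int peekChar(void) {
    if (inputDepth == 0)
        return -1;
    InputContext *top = &inputStack[inputDepth - 1];
    return top->text[top->index] != '\0' ? (unsigned char)top->text[top->index] : -1;
}

/* Next character, popping finished contexts; -1 when all input is consumed. */
static int nextChar(void) {
    while (inputDepth > 0) {
        InputContext *top = &inputStack[inputDepth - 1];
        if (top->text[top->index] != '\0')
            return (unsigned char)top->text[top->index++];
        inputDepth--;                        /* end of this file: back to the includer */
    }
    return -1;
}

/* The interface the yacc parser needs: return the next token code. */
static int yylex(void) {
    int c;
    do {
        c = nextChar();
    } while (c != -1 && !isalpha(c));        /* this toy only knows identifiers */
    if (c == -1)
        return TOKEN_EOF;
    while ((c = peekChar()) != -1 && isalnum(c))
        nextChar();                          /* consume the rest of the identifier */
    return TOKEN_IDENTIFIER;
}
```

Using peekChar() for the identifier tail keeps a token from continuing across a file boundary.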

9.2. Parser

9.3. Xref

9.4. Server

9.5. Refactory

9.6. Extract

Analyzes control flow for extract-function/macro/variable refactoring. Operates in two phases: collection during parsing (registers synthetic labels, gathers references) and analysis after parsing (classifies variables, generates output).

9.7. Cxref

9.8. Main

9.9. Memory

9.9.1. Responsibilities

The Memory module provides arena-based allocation for performance-critical and request-scoped operations:

- Fast allocation for macro expansion and lexical analysis
- Bulk deallocation for request-scoped cleanup
- Multiple specialized arenas for different data lifetimes
- Overflow detection and optional dynamic resizing

9.9.2. Design Rationale

Historical Context

In the 1990s when c-xrefactory originated, memory was scarce. The design had to:

- Minimize allocation overhead (no malloc/free per token)
- Support large projects despite limited RAM
- Allow overflow recovery via flushing and reuse
- Enable efficient bulk cleanup

Most memory arenas use statically allocated areas. Only cxMemory supports dynamic resizing to handle out-of-memory situations by discarding, flushing and reusing memory. This forced implementation of a complex caching strategy since overflow could happen mid-file.

Modern Benefits

Even with abundant modern memory, arena allocators provide:

- Performance: Bump pointer allocation is ~10x faster than malloc
- Cache locality: Related data allocated contiguously
- Automatic cleanup: Bulk deallocation prevents leaks
- Request scoping: Natural fit for parsing/expansion operations
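The essence of a bump allocator, and of the marker-based cleanup used throughout the code, fits in a few lines. This is a generic sketch with hypothetical names (`Arena`, `arenaAlloc()`, `arenaFreeUntil()`), not the Memory module; it assumes the backing area is suitably aligned and rounds sizes up to 8 bytes.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    char   *area;    /* backing storage */
    size_t  size;    /* total bytes in area */
    size_t  index;   /* first free byte: everything below is allocated */
} Arena;

static void arenaInit(Arena *arena, char *area, size_t size) {
    arena->area = area;
    arena->size = size;
    arena->index = 0;
}

/* Bump allocation: hand out the next 'size' bytes (rounded up to 8),
 * or NULL on overflow. No per-allocation bookkeeping is needed. */
static void *arenaAlloc(Arena *arena, size_t size) {
    size_t aligned = (size + 7) & ~(size_t)7;
    if (aligned > arena->size - arena->index)
        return NULL;
    void *p = arena->area + arena->index;
    arena->index += aligned;
    return p;
}

/* Bulk deallocation: release everything allocated at or after 'marker'. */
static void arenaFreeUntil(Arena *arena, void *marker) {
    char *m = marker;
    assert(m >= arena->area && m <= arena->area + arena->index);
    arena->index = (size_t)(m - arena->area);
}
```

`arenaAlloc(arena, 0)` returns the current top without allocating anything, which is the same trick as the `ppmAllocc(0)` marker in the Marker-Based Cleanup pattern.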

9.9.3. Arena Types and Lifetimes

| Arena | Purpose | Lifetime |
|---|---|---|
| cxMemory | Symbol database, reference tables, cross-reference data | File or session |
| ppmMemory | Preprocessor macro expansion buffers (temporary allocations) | Per macro expansion |
| macroBodyMemory | Macro definition storage | Session |
| macroArgumentsMemory | Macro argument expansion | Per macro invocation |
| fileTableMemory | File metadata and paths | Session |
| optMemory | Command-line and config option strings (with special pointer adjustment) | Session |

9.9.4. Key Design Patterns

Marker-Based Cleanup

Functions save a marker before temporary allocations:

char *marker = ppmAllocc(0);    // Save current index
// ... temporary allocations ...
ppmFreeUntil(marker);           // Bulk cleanup

Buffer Growth Pattern

Long-lived buffers that may need to grow:

// Allocate initial buffer
bufferDesc.buffer = ppmAllocc(initialSize);
char *marker = ppmAllocc(0);    // Temporaries start after the buffer
// ... use buffer, may need growth ...
// Free temporaries FIRST
ppmFreeUntil(marker);
// NOW buffer can grow (it's at top-of-stack)
expandPreprocessorBufferIfOverflow(&bufferDesc, writePointer);

Overflow Handling

The cxMemory arena supports dynamic resizing:

bool cxMemoryOverflowHandler(int n) {
    // Attempt to resize arena
    // Return true if successful
}

memoryInit(&cxMemory, "cxMemory", cxMemoryOverflowHandler, initialSize);

When overflow occurs, the handler can:

- Resize the arena (if within limits)
- Flush old data and reset
- Signal failure (fatal error)

9.9.5. Interface

Key functions (see memory.h):

// Initialization
void memoryInit(Memory *memory, char *name,
                bool (*overflowHandler)(int n), int size);

// Allocation
void *memoryAlloc(Memory *memory, size_t size);
void *memoryAllocc(Memory *memory, int count, size_t size);

// Reallocation (only for most recent allocation)
void *memoryRealloc(Memory *memory, void *pointer,
                    size_t oldSize, size_t newSize);

// Bulk deallocation
size_t memoryFreeUntil(Memory *memory, void *marker);

// Guards
bool memoryIsAtTop(Memory *memory, void *pointer, size_t size);

9.9.6. Common Pitfalls

See the "Arena Allocator Lifetime Violations" section in the Development Environment chapter for:

- Attempting to resize buffers not at top-of-stack
- Calling FreeUntil() too late
- Mixing arena lifetimes

9.9.7. Future Directions

Modern systems have abundant virtual memory. Possible improvements:

- Simplify overflow handling - Allocate larger initial arenas
- Separate lifetime management - Don’t mix temporary and long-lived allocations
- Consider alternatives - Linear allocators for some use cases
- Add debug modes - Track allocation patterns and detect violations

The experimental FlushableMemory type explores some of these ideas but hasn’t replaced the current implementation.

9.10. Cxfile

9.10.1. Responsibilities

Read and write the CXref database in "plain" text format.

9.10.2. File format

The current file format for the cross-reference data consists of records with the general format

<number><key>[<value>]

There are two important types of lines, a file information line and a symbol information line.

The actual keys are documented in cxfile.c, but here is an example file information line:

32571f 1715027668m 21:/usr/include/ctype.h

First we have two simple value/key pairs. We see "32571f" indicating that this is file information for file with file number 32571.

Secondly we have "1715027668m". This is the modification time of the file which is stored to be able to see if that file has been updated since the reference database was last written.

And the third part is "21:/usr/include/ctype.h", which is of a record type that is a bit more complex. The number is the length of the value. The ':' indicates that the record is a filename.
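A sketch of reading such a line follows. Judging from this one example, where 21 precedes a 20-character path, the length of a ':' record seems to count the ':' as well, and the sketch assumes so; verify against cxfile.c before relying on it. `FileInfo` and `parseFileInfoLine()` are hypothetical.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

typedef struct {
    long fileNumber;     /* from the 'f' record */
    long mtime;          /* from the 'm' record */
    char name[256];      /* from the ':' record */
} FileInfo;

/* Parses lines like "32571f 1715027668m 21:/usr/include/ctype.h". */
static bool parseFileInfoLine(const char *line, FileInfo *info) {
    const char *p = line;
    memset(info, 0, sizeof *info);
    while (*p != '\0') {
        long value = 0;
        if (*p == ' ') {                 /* records are separated by blanks */
            p++;
            continue;
        }
        if (!isdigit((unsigned char)*p))
            return false;
        while (isdigit((unsigned char)*p))
            value = value * 10 + (*p++ - '0');
        switch (*p++) {
        case 'f': info->fileNumber = value; break;
        case 'm': info->mtime = value; break;
        case ':': {
            long length = value - 1;     /* assumption: length includes the ':' */
            if (length < 0 || length >= (long)sizeof info->name || (long)strlen(p) < length)
                return false;
            memcpy(info->name, p, (size_t)length);
            info->name[length] = '\0';
            p += length;
            break;
        }
        default: return false;           /* key not handled by this sketch */
        }
    }
    return true;
}
```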

9.11. c-xref.el

9.12. c-xrefactory.el

10. Data Structures

There are a lot of different data structures used in c-xrefactory.

This is a first step towards visualising some of them.

10.1. ReferenceableItem and Reference: Core Domain Concepts

These are the fundamental cross-reference data structures that represent the "what" and "where" of code entities.

10.1.1. ReferenceableItem

A ReferenceableItem represents a referenceable entity in the codebase - something that can be referenced from multiple locations:

- Functions and variables
- Types (structs, unions, enums, typedefs)
- Macros
- Include directives (special case: TypeCppInclude)
- Yacc non-terminals and rules

Each ReferenceableItem contains:

- linkName: Fully qualified name (e.g., "MyClass::method")
- type: What kind of entity (function, variable, type, etc.)
- storage, scope, visibility: Language properties
- includeFile: For TypeCppInclude items, which file is being included
- references: Linked list of all Reference (occurrences) of this entity

ReferenceableItems are stored in the referenceableItemTable (hash table) and persisted to .cx files.

10.1.2. Reference (Occurrence)

A Reference represents a single occurrence of a ReferenceableItem at a specific location:

- position: File, line, and column where this occurrence appears
- usage: How it’s used (definition, declaration, usage, etc.)
- next: Pointer to next occurrence in the list

Each ReferenceableItem maintains a linked list of all its References, allowing you to find every place that entity appears in the codebase.

Note: The term "Reference" in this context means "occurrence" - one specific use of an entity at one location. This is distinct from C++ references or reference semantics.

10.2. Symbol (Parser Symbol Table)

There is also a structure called Symbol. This is separate from ReferenceableItem and serves a different purpose:

Symbol - Parser-level symbol table entry (temporary, exists only during parsing):

- Used by the C/Yacc parser for semantic analysis
- Contains type information, position, storage class
- Lives in symbolTable (hash table) during file parsing
- Not persisted: discarded after parsing completes

ReferenceableItem - Cross-reference entity (persistent across entire codebase):

- Created FROM Symbol properties when a referenceable construct is found
- Stored in referenceableItemTable and saved to .cx files
- Accumulates References from all files in the project

Relationship: During parsing, when the parser encounters a referenceable symbol (function, variable, etc.), it:

- Creates a Symbol in symbolTable for semantic analysis
- Creates or finds a ReferenceableItem by copying Symbol properties
- Adds a Reference to that ReferenceableItem’s list
- Discards the Symbol when parsing completes

This separation allows the parser to maintain its own temporary symbol table while building the persistent cross-reference database.
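The lifetime difference can be sketched in a few lines. Everything here is hypothetical and simplified: a fixed array plays the role of referenceableItemTable, a counter stands in for the list of References, and recordSymbol() is an invented name. The Symbol values live only for the duration of one "parse", while the items their properties were copied into survive it.

```c
#include <string.h>

/* Hypothetical, simplified shapes. */
typedef struct {
    char name[64];
    int type;
    int storage;
} Symbol;                        /* parser-only, temporary */

typedef struct {
    char linkName[64];
    int type;
    int storage;
    int referenceCount;          /* stands in for the real list of References */
} ReferenceableItem;             /* persistent */

/* Hypothetical: find or create the item for a symbol, copying its
   properties on creation, and record one more occurrence. */
void recordSymbol(ReferenceableItem *items, int *itemCount, int capacity,
                  const Symbol *symbol) {
    for (int i = 0; i < *itemCount; i++) {
        if (strcmp(items[i].linkName, symbol->name) == 0) {
            items[i].referenceCount++;          /* already known: add occurrence */
            return;
        }
    }
    if (*itemCount == capacity)
        return;                                 /* table full; real code would grow */
    ReferenceableItem *item = &items[(*itemCount)++];
    strcpy(item->linkName, symbol->name);       /* both buffers hold 64 chars */
    item->type = symbol->type;
    item->storage = symbol->storage;
    item->referenceCount = 1;
}
```

In use, the symbols would sit in a scope that ends with the parse (for instance a block-local array), and only the items array outlives it.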

10.3. Files and Buffers

Many strange things are going on with reading files, so that part is not completely understood yet.

Here is an initial attempt at illustrating how some of the file and text/lexem buffers are related.

It would be nice if the LexemStream structure could point to a LexemBuffer instead of holding separate pointers for which it is impossible to know what they actually point to…

| This could be achieved if we could remove the CharacterBuffer from LexemBuffer and make that a reference instead of a composition. Then we’d need to add a CharacterBuffer to the structures that have a LexemBuffer as a component (if they use it). |
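A sketch of what the proposed layout could look like. The types are heavily trimmed and every field name (chars, next, lexems, end, read) as well as lexemStreamRemaining() is hypothetical. The only point is that the stream holds one LexemBuffer pointer, and the LexemBuffer refers to its CharacterBuffer instead of embedding it.

```c
/* Hypothetical layout for the proposal above; field names are illustrative. */
typedef struct characterBuffer {
    char chars[256];
    int  next;                          /* read position in chars */
} CharacterBuffer;

typedef struct lexemBuffer {
    char lexems[256];
    int  end;                           /* number of lexem bytes written */
    CharacterBuffer *characterBuffer;   /* reference instead of composition */
} LexemBuffer;

typedef struct lexemStream {
    LexemBuffer *buffer;                /* one pointer: the stream knows what it reads */
    int read;                           /* offset into buffer->lexems */
} LexemStream;

/* Hypothetical: unread lexem bytes, derived from the buffer rather than
   from separately maintained begin/end pointers. */
int lexemStreamRemaining(const LexemStream *stream) {
    return stream->buffer->end - stream->read;
}
```

With a single buffer pointer, quantities such as the remaining unread lexems follow from the buffer itself, instead of from loose pointers whose targets have to be guessed.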

10.4. Modes

c-xrefactory operates in different modes ("regimes" in original c-xref parlance):

- xref - batch mode reference generation
- server - editor server
- refactory - refactoring browser

The default mode is "xref". The command line options -server and -refactory select one of the other modes. Branching is done in the final lines of main().
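A minimal sketch of choosing a mode from the command line, assuming only the option spellings given above. The Mode enum, modeFromArguments() and modeName() are hypothetical; in c-xrefactory the corresponding branching happens at the end of main(), and the real option handling is considerably more involved.

```c
#include <string.h>

/* Hypothetical names for the three regimes. */
typedef enum { ModeXref, ModeServer, ModeRefactory } Mode;

Mode modeFromArguments(int argc, char *argv[]) {
    Mode mode = ModeXref;                       /* default mode */
    for (int i = 1; i < argc; i++) {
        if (strcmp(argv[i], "-server") == 0)
            mode = ModeServer;
        else if (strcmp(argv[i], "-refactory") == 0)
            mode = ModeRefactory;
    }
    return mode;
}

const char *modeName(Mode mode) {
    switch (mode) {
    case ModeServer:    return "server";
    case ModeRefactory: return "refactory";
    case ModeXref:      return "xref";
    }
    return "unknown";
}
```

In main() the returned Mode would then pick one of three entry points, for example with a switch; keeping that choice in one place is what would make the regimes easier to separate later.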

The code for the modes is intertwined, probably through re-use of already existing functionality when extending to a refactoring browser.

One piece of evidence for this is that the refactory module calls the "main task" as a "sub-task". This forces some intricate fiddling with the options data structure, like copying it, which I don’t fully understand yet.

TODO?: Split the various "regimes" into more clearly separated concerns and handle options differently.

10.5. Options

The Options data structure is used to collect options from the command line as well as from options/configuration files and piped options from the editor client using process-to-process communication.

It consists of a collection of fields of the following types:

- elementary types (bool, int, …)
- strings (pointers to strings)
- lists of strings (linked lists of pointers to strings)
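The three kinds of fields can be illustrated with a tiny stand-in for Options. The field names (verbose, tabSize, outputFileName, includeDirs), the StringList shape and stringListLength() are hypothetical, not the actual declarations.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical list of strings, e.g. for include directories. */
typedef struct stringList {
    char *string;
    struct stringList *next;
} StringList;

/* Hypothetical stand-in for Options with one field of each kind. */
typedef struct {
    bool        verbose;          /* elementary */
    int         tabSize;          /* elementary */
    char       *outputFileName;   /* string */
    StringList *includeDirs;      /* list of strings */
} Options;

int stringListLength(const StringList *list) {
    int length = 0;
    for (; list != NULL; list = list->next)
        length++;
    return length;
}
```

Only the last two kinds contain pointers, which is why they are the ones that need special care when an options structure is copied.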

10.5.1. Allocation & Copying

Options has its own allocation using optAlloc, which allocates in a separate area, currently part of the options structure and utilizing "dynamic allocation" (dm_ functions on the Memory structure).

The Options structure is copied multiple times during a session, both as a backup (savedOptions) and into a separate options structure used by the Refactorer (refactoringOptions).

Since the options memory is then also copied, all pointers into the options memory need to be updated. To be able to do this, the options structure contains lists of addresses that need to be "shifted".

When an option with a string or string list value is modified the option is registered in either the list of string valued options or the list of string list valued options. When an options structure is copied it must be performed using a deep copy function which "shifts" those options and their values (areas in the options memory) in the copy so that they point into the memory area of the copy, not the original.

After the deep copy, the following point into the option memory of the copy:

- the lists of string and string list valued options (option fields)
- all string and string list valued option fields that are used (allocated)
- all list nodes for the used options (allocated)
- all list nodes for the string lists (allocated)
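The "shifting" deep copy can be sketched as follows, assuming (as described above) that the option memory is embedded in the options structure itself. Every registered field address and every string value that points into the option memory then sits at the same offset in the copy as in the original, so shifting means "same offset, new base". Only string valued options are shown; string lists would additionally need each node's string and next pointers shifted the same way. All names (setStringOption, deepCopyOptions, shifted, OPTION_MEMORY_SIZE, MAX_REGISTERED and the fields) are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define OPTION_MEMORY_SIZE 1024              /* illustrative */
#define MAX_REGISTERED 16

typedef struct {
    char  *outputFileName;                   /* hypothetical string option */
    char  *includePath;                      /* another hypothetical string option */
    char **registered[MAX_REGISTERED];       /* addresses of string fields in use */
    int    registeredCount;
    char   memory[OPTION_MEMORY_SIZE];       /* option memory, inside the struct */
    size_t memoryIndex;                      /* bump index into memory */
} Options;

/* Store a copy of value in the option memory and register the field.
   field must point to a char * member of *options. */
bool setStringOption(Options *options, char **field, const char *value) {
    size_t length = strlen(value) + 1;
    if (options->memoryIndex + length > OPTION_MEMORY_SIZE
        || options->registeredCount == MAX_REGISTERED)
        return false;
    char *copy = &options->memory[options->memoryIndex];
    memcpy(copy, value, length);
    options->memoryIndex += length;
    *field = copy;
    options->registered[options->registeredCount++] = field;
    return true;
}

/* Map an address inside *from to the same offset inside *to. */
static void *shifted(const Options *from, Options *to, const void *address) {
    return (char *)to + ((const char *)address - (const char *)from);
}

void deepCopyOptions(Options *to, const Options *from) {
    memcpy(to, from, sizeof *to);            /* shallow: pointers still aim into *from */
    for (int i = 0; i < from->registeredCount; i++) {
        char **fromField = from->registered[i];
        char **toField = shifted(from, to, fromField);
        to->registered[i] = toField;                    /* shift the registered address */
        if (*fromField != NULL)
            *toField = shifted(from, to, *fromField);   /* shift the string value */
    }
}
```

Computing each offset relative to the source structure, instead of adding the raw difference between two unrelated addresses, keeps the pointer arithmetic inside a single object as the C standard requires. Reading the values from the source also means a field registered twice is still shifted only once.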

10.6. Arena Allocators (Memory)

Arena allocators (also called region-based or bump allocators) are the fundamental memory management strategy used throughout c-xrefactory for performance-critical operations like macro expansion and lexical analysis.

10.6.1. The Memory Structure

    typedef struct memory {
        char *name;                      // Arena name for diagnostics
        bool (*overflowHandler)(int n);  // Optional resize handler
        int index;                       // Next allocation offset (bump pointer)
        int max;                         // High-water mark
        size_t size;                     // Total arena size
        char *area;                      // Actual memory region
    } Memory;

10.6.2. Allocation Model

Arena allocators use bump pointer allocation:

-

- Allocation: Return &area[index], then index += size
- Deallocation: Bulk rollback via FreeUntil(marker)
- Reallocation: Only possible for the most recent allocation

This is extremely fast (O(1) allocation) but requires stack-like discipline for deallocation.
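A minimal bump allocator following those rules. It reuses the field names of the Memory structure but leaves out overflowHandler and ignores alignment. memoryAlloc() and memoryFreeUntil() are hypothetical stand-ins for the ppmAllocc()/ppmFreeUntil() style functions used in the examples that follow, not their actual implementation.

```c
#include <stddef.h>

/* Trimmed copy of Memory: overflowHandler left out for brevity. */
typedef struct memory {
    const char *name;   /* arena name for diagnostics */
    int index;          /* next allocation offset (bump pointer) */
    int max;            /* high-water mark */
    size_t size;        /* total arena size */
    char *area;         /* the memory region itself */
} Memory;

/* Allocation: return &area[index], then index += size. No alignment handling. */
void *memoryAlloc(Memory *memory, int size) {
    if (size < 0 || (size_t)memory->index + (size_t)size > memory->size)
        return NULL;    /* the real arena may call its overflow handler instead */
    void *pointer = &memory->area[memory->index];
    memory->index += size;
    if (memory->index > memory->max)
        memory->max = memory->index;
    return pointer;
}

/* Deallocation: bulk rollback of everything allocated after marker. */
void memoryFreeUntil(Memory *memory, void *marker) {
    memory->index = (int)((char *)marker - memory->area);
}
```

Allocating zero bytes returns the current top without moving it, which is how a marker is taken; rolling back to that marker frees everything above it in one assignment, while max keeps the high-water mark for diagnostics.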

10.6.3. Stack-Like Discipline

Arenas follow LIFO (last-in-first-out) cleanup:

    marker = ppmAllocc(0);     // Save current index
    temp1 = ppmAllocc(100);    // Allocate
    temp2 = ppmAllocc(200);    // Allocate
    // Use temp1, temp2...
    ppmFreeUntil(marker);      // Free both temp1 and temp2

10.6.4. Key Constraint: Top-of-Stack Reallocation

Only the most recent allocation can be resized:

    buffer = ppmAllocc(1000);  // Allocate buffer
    temp = ppmAllocc(500);     // Allocate temporary
    ppmReallocc(buffer, ...);  // ❌ FAILS - buffer not at top

This constraint is enforced by guards in memory.c (see Development Environment chapter).
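One way such a guard can work, as a sketch: an allocation is on top exactly when it ends at the current bump pointer, so the check needs the allocation's old size. The Arena type, arenaAlloc() and arenaRealloc() are hypothetical, and the actual guards in memory.c may be implemented differently.

```c
#include <stddef.h>

/* Hypothetical minimal arena. */
typedef struct {
    char  *area;
    size_t size;
    size_t index;       /* bump pointer */
} Arena;

void *arenaAlloc(Arena *arena, size_t size) {
    if (arena->index + size > arena->size)
        return NULL;
    void *pointer = arena->area + arena->index;
    arena->index += size;
    return pointer;
}

/* Resize in place, but only if pointer is the most recent allocation,
   i.e. it ends exactly at the current bump pointer. */
void *arenaRealloc(Arena *arena, void *pointer, size_t oldSize, size_t newSize) {
    char *start = pointer;
    if (start + oldSize != arena->area + arena->index)
        return NULL;                        /* not on top: refuse */
    size_t offset = (size_t)(start - arena->area);
    if (offset + newSize > arena->size)
        return NULL;                        /* would overflow the arena */
    arena->index = offset + newSize;        /* grow or shrink in place */
    return pointer;
}
```

Growing anything below the top would overwrite the allocations above it, which is why the check refuses rather than moves data.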

10.6.5. Memory Arena Types

c-xrefactory uses specialized arenas for different purposes (see Modules chapter for details):

- cxMemory - Cross-reference database and symbol tables
- ppmMemory - Preprocessor macro expansion (temporary)
- macroBodyMemory - Macro body buffers
- macroArgumentsMemory - Macro argument expansion
- fileTableMemory - File table entries
- optMemory - Option strings (with special pointer adjustment)

10.7. Preload Mechanism

The preload mechanism allows the server to work with editor buffer contents that haven’t been saved to disk. This is essential for providing real-time symbol navigation and completion while the user is actively editing.

10.7.1. How It Works

When an editor buffer is modified but not yet saved:

- Editor Action: The Emacs client writes the current buffer content to a temporary file
- Server Request: The client sends a request with -preload <filename> <tmpfile> options
- Buffer Association: The server creates an EditorBuffer structure linking the on-disk filename to the temporary file containing the actual content
- Transparent Parsing: When the server needs to parse the file, it transparently reads from the temporary file instead of the on-disk file
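The filename substitution at the heart of preloading can be sketched like this. The EditorBuffer fields shown, preload() and pathToRead() are simplified, hypothetical stand-ins rather than the real server code.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified EditorBuffer: the file the editor is editing,
   and the temporary file that holds its unsaved content. */
typedef struct editorBuffer {
    const char *fileName;            /* on-disk name */
    const char *preloadedFileName;   /* temporary file with the buffer content */
    struct editorBuffer *next;
} EditorBuffer;

/* Register one "-preload <filename> <tmpfile>" pair. */
void preload(EditorBuffer **buffers, EditorBuffer *buffer,
             const char *fileName, const char *tmpFile) {
    buffer->fileName = fileName;
    buffer->preloadedFileName = tmpFile;
    buffer->next = *buffers;
    *buffers = buffer;
}

/* The path the parser should actually open for fileName. */
const char *pathToRead(const EditorBuffer *buffers, const char *fileName) {
    for (const EditorBuffer *b = buffers; b != NULL; b = b->next)
        if (strcmp(b->fileName, fileName) == 0)
            return b->preloadedFileName;
    return fileName;                 /* not preloaded: read from disk */
}
```

After `preload(&buffers, &slot, "src/main.c", "/tmp/buffer.c")` (example paths), asking for src/main.c yields the temporary file, while every other file is still read from disk.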

10.7.2. Why It’s Needed

Without preload, the server would only see the last saved version of the file. The preload mechanism ensures that:

- Symbol navigation works with the current buffer state
- Completion suggests symbols based on what’s actually typed
- Refactorings operate on the current code, not stale saved content
- Users get immediate feedback without having to save constantly

10.7.3. Reference Management

When a file is preloaded, the server must handle reference updates carefully:

- Old references from the previous file version must be removed from the reference table before parsing
- This prevents duplicate references (one set at old positions, another at new positions)
- The removal happens in removeReferencesForFile() when preloaded content is detected
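Removing a file's old occurrences from one item's list is a filtered unlink on a singly linked list. This is a hypothetical sketch (removeReferencesInFile() and the fileNumber field are invented here), not the body of removeReferencesForFile(), which presumably has to do this for every item in referenceableItemTable.

```c
#include <stdlib.h>

/* Hypothetical trimmed occurrence. */
typedef struct reference {
    int fileNumber;
    int line;
    struct reference *next;
} Reference;

/* Unlink and free every reference belonging to fileNumber;
   returns how many were removed. */
int removeReferencesInFile(Reference **list, int fileNumber) {
    int removed = 0;
    Reference **link = list;             /* the pointer that points at the current node */
    while (*link != NULL) {
        Reference *ref = *link;
        if (ref->fileNumber == fileNumber) {
            *link = ref->next;           /* bypass and free the node */
            free(ref);
            removed++;
        } else {
            link = &ref->next;           /* keep it, advance */
        }
    }
    return removed;
}
```

Walking a pointer to the link (Reference **) avoids a special case for removing the head of the list.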

10.8. Browser Stack

The browser stack maintains navigation history for symbol references, allowing users to browse through code by pushing symbol lookups and navigating back through previous queries.

10.8.1. Structure

The browser stack is a linked list of OlcxReferences entries, where each entry represents a symbol lookup session:

- Stack entries contain complete symbol information and reference lists for one navigation session
- Top pointer indicates the current active entry being navigated
- Root pointer tracks the base of the stack (most recent entry still available)
- Entries between root and top are "future" navigation states that can be returned to via "next"
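A sketch of the root/top arrangement, with each entry pointing to the older entry below it. Entry, BrowserStack and the three functions are hypothetical. In particular, what push does when "future" entries exist (here they are simply dropped, like forward history in a web browser) is an assumption of this sketch.

```c
#include <stddef.h>

/* Hypothetical, simplified stack entry. */
typedef struct entry {
    const char *symbolName;
    struct entry *previous;      /* older entry */
} Entry;

typedef struct {
    Entry *root;                 /* newest entry still kept */
    Entry *top;                  /* entry currently being navigated */
} BrowserStack;

/* Push: assumed to drop any "future" entries above top. */
void stackPush(BrowserStack *stack, Entry *entry) {
    entry->previous = stack->top;
    stack->root = stack->top = entry;
}

/* Pop: move top down; the popped entry stays reachable from root. */
void stackPop(BrowserStack *stack) {
    if (stack->top != NULL)
        stack->top = stack->top->previous;
}

/* Next: move top one entry back up towards root. */
void stackNext(BrowserStack *stack) {
    if (stack->top == stack->root)
        return;                              /* no "future" entries */
    Entry *e = stack->root;
    while (e->previous != stack->top)        /* find the entry just above top */
        e = e->previous;
    stack->top = e;
}
```

Popping only moves top, so an entry that was popped is still linked from root, and that is what lets "next" return to it.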

10.8.2. Lifecycle

- Push: When the user requests symbol references (e.g., -olcxpush), a new empty entry is created on the stack
- Population: After parsing, the entry is filled with BrowserMenu structures containing references
- Navigation: Commands like -olcxnext and -olcxprevious move through references in the current entry
- Pop: The user can pop back to previous entries to return to earlier symbol lookups
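Moving through the references of the current entry can be sketched as a cursor over that entry's reference list. EntryCursor, cursorNext() and cursorPrevious() are hypothetical, and stopping at either end (rather than wrapping around) is an assumption of this sketch.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical trimmed reference. */
typedef struct reference {
    int line;
    struct reference *next;
} Reference;

/* Hypothetical per-entry navigation state. */
typedef struct {
    Reference *references;       /* all references for the pushed symbol */
    Reference *current;          /* reference the editor was last moved to */
} EntryCursor;

/* Move to the following reference; false at the end of the list. */
bool cursorNext(EntryCursor *cursor) {
    if (cursor->current == NULL || cursor->current->next == NULL)
        return false;
    cursor->current = cursor->current->next;
    return true;
}

/* Move to the preceding reference by scanning from the head; false at the start. */
bool cursorPrevious(EntryCursor *cursor) {
    if (cursor->current == NULL || cursor->current == cursor->references)
        return false;
    Reference *r = cursor->references;
    while (r->next != cursor->current)
        r = r->next;
    cursor->current = r;
    return true;
}
```

With a singly linked list, "previous" costs a scan from the head; for the short per-symbol lists involved that is usually acceptable, and it avoids a second link per reference.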

10.8.3. Relationship to Parsing

The browser stack is populated in two stages:

- Parse-time: References are collected in the referenceableItemTable during file parsing
- Menu Creation: ReferenceableItems are wrapped in BrowserMenu structures and added to the browser stack entry via putOnLineLoadedReferences()

This separation means that browser stack entries can become stale if files are reparsed (e.g., with preloaded content) without refreshing the stack. Users typically need to pop and re-push to get fresh reference lists after edits.

10.9. Browser Menu

A browser menu is a navigable list of referenceable items with their occurrences, organized for presentation to the user in Emacs. Multiple items may appear in a single menu when name resolution finds several candidates (e.g., symbols with the same name in different scopes).